1. Introduction

1.1. Overview

Virtual Reality and Augmented Reality technologies have proven themselves to be valuable additions to the fields of digital entertainment, information and workspace, but solutions leveraging these technologies have required processing platforms that, in single-use scenarios, often negatively affected the cost-benefit equation. The platforms and equipment required to process and present immersive experiences have been expensive and often dedicated to the task at hand. As more and more realism is introduced, the cost of ownership has slowed overall adoption.

The cost and complexity of computational systems in all fields has often followed a cycle wherein the earliest solutions demanded bespoke technology and significant resources to overcome less optimized algorithms, while later services have benefitted from reusable general-purpose platforms. The industry-wide adoption of cloud technologies further reinforces the pay-as-you-go model, which allows access to previously unattainable levels of processing that can now be used to present immersive experiences. Low-latency, high-throughput networks allow near-instantaneous access to remotely stored data while offering a local computing experience backed by data-centre-based systems.

Cloud VR/AR brings together significant advances in cloud computing and interactive quality networking to provide high-quality experiences to those who were previously priced out of immersive technologies.

1.2. Scope

This document provides descriptive material on Cloud VR/AR, a high-level reference architecture that uses shared computational resources found in cloud-based services and low-latency, high-capacity 5G mobile networks that provide interactive connectivity. The current state of the art is described, but it should be noted that innovation in technology continues to improve the overall solution space.

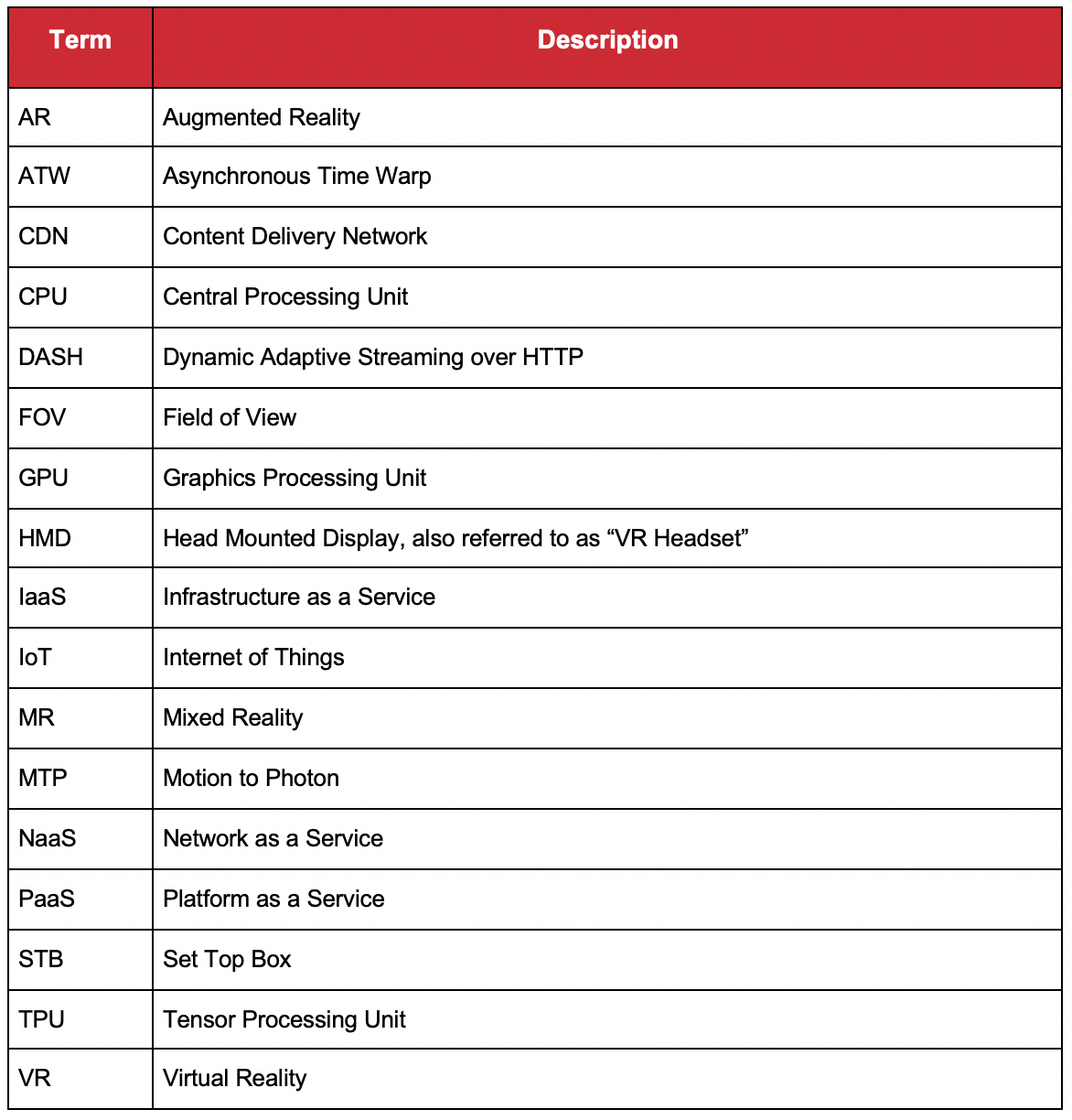

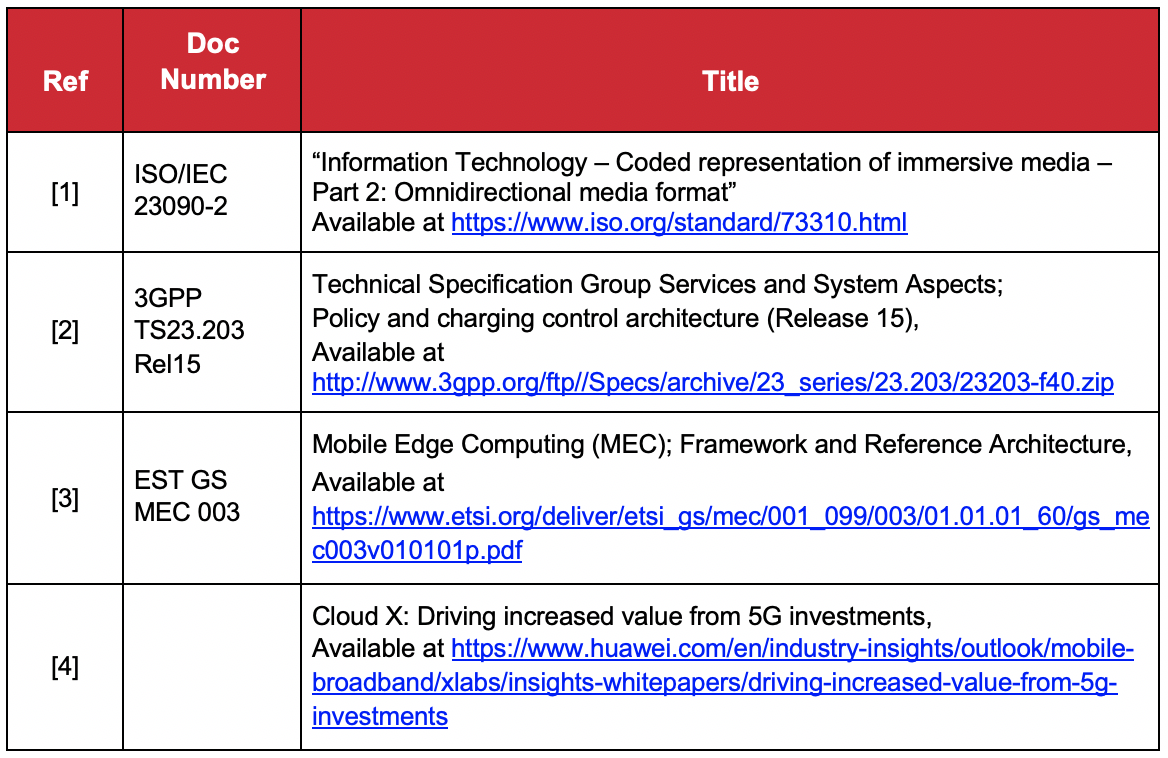

1.3. Abbreviations

1.4. References

2. Motivation: AR/VR Technology and Evolution from stationary to Cloud AR/VR

The last four years have seen considerable advances in both technology innovation and content quality within the virtual reality domain. These advances have helped create new solution verticals bringing additional immersive experiences in the consumer and professional domains. As the demands on virtual reality systems increase, we reach a point where current solution designs can no longer continue to scale and new approaches are needed for the future.

2.1. Terminology: Explaining AR/VR/MR, Cloud/Edge & Thin/Thick Client

“Virtual Reality” (VR) is an artificial rendering of an environment making use of audio and visual fields, possibly supplemented with other sensory devices. Generally, two forms of VR exist:

- Omnidirectional/Panoramic video – an approach in which a natural scene is captured with a special camera and microphone rig. Analogous to a traditional television system with an unrestricted viewing arc.

- Computer generated/Synthetic environment – leverages the use of data models and algorithms to create an artificial view based on the current pose and other input stimuli. VR-based gaming environments are a typical example of this approach.

Forms of Virtual Reality that mix naturally captured scenes with computer-generated elements are also possible.

“Augmented Reality” (AR) is a presentation method wherein objects in the real world are supplemented with artificial digital objects which can be either constructive (i.e. adding to the real-world objects) or destructive (i.e. masking the real-world object). The Pokémon Go[1] game, released in 2016, is an example of a constructive AR experience wherein computer-generated characters are overlaid onto the real-world view, whereas an application that appears to show renditions of objects inside of another would be an example of destructive AR.

“Mixed Reality” (MR) environments merge elements of physical and virtual worlds into a single immersive experience that is generally depicted as an augmented reality experience.

“The Cloud” is a computing architecture whereby resources can be called upon on an as-needed basis. Those resources include general and specific computing functions as well as persistent storage, and may also include software functions. The key advantage of a cloud computing architecture is that it can scale to accommodate the compute and storage requests made by an application. Early cloud deployments built out all resources into large data centres with high-capacity networking for access; however, recent demands for low-latency interactions with cloud-based applications have seen resources move closer to the edge of the network. These Edge resources often work in unison with Core resources to accomplish tasks.

“Thin Client” is a device architecture whereby minimal functions are built into the terminal client. For VR and AR terminals these include the display screen, speakers for output, vision positioning and hand-controller sensors for input. A “thin client” approach uses the network to pass inputs to processing functions and receive the necessary images to display.

In contrast, a thick client includes all processing functions within a single device. Content is either (i) retrieved from local storage and processed for rendering or (ii) obtained as data streams from network services for processing.

2.2. AR/VR today – use cases, deployments, ecosystem/market

Virtual Reality and Augmented Reality, whose heritage can be traced back to highly specialized and bespoke systems such as flight simulators and military heads-up displays, continue to find new applications in both the Enterprise and Consumer domains. The value of these technologies comes from being able to achieve or augment something in an artificial domain that could not be attained in the real world, and this value proposition carries on in different ways into new areas of consumption.

The enterprise domain seeks to find new approaches to increase workplace efficiency and productivity through applications such as virtual training systems, augmentation of manufacturing and enhanced remote collaboration. In addition, the professional services sector is embracing virtual reality for applications ranging from real estate to psychological counselling. Business models within the enterprise market are highly sensitive to the ownership of assets, especially those with a relatively high rate of depreciation. Enterprises look forward to turning heavy, local technology assets into light, cloud-based, shareable ones in order to optimize the current revenue structure.

Figure 1. Initial applications for VR in the enterprise domain

Use of virtual and augmented reality in the consumer domain has an emphasis on entertainment, where gaming and 360° panoramic video have driven adoption. Social aspects are beginning to emerge within this domain to alleviate the sensation of isolation inherent in the general principles of virtual reality. Interoperable end-to-end ecosystems for immersive content production and delivery to end users are essential to establishing virtual reality as a mass-market service and to changing the content storage pattern from local device storage to a cloud-based, thin-client model.

Figure 2. Initial applications for VR in the consumer domain

2.3. AR/VR Evolution towards Cloud: Challenges and Solutions

2.3.1. Key challenges of today’s AR/VR services

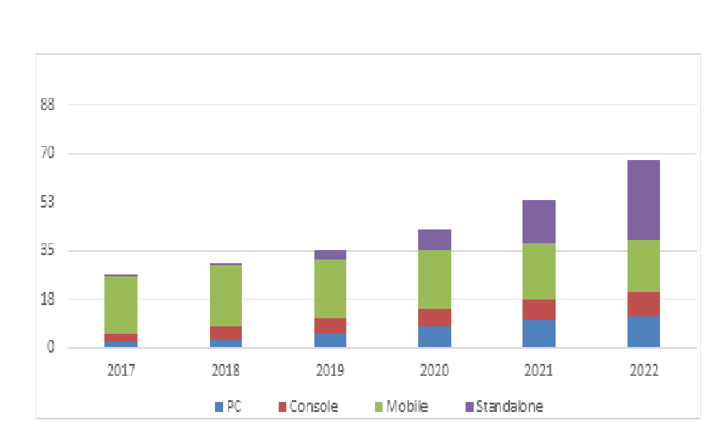

VR technology has made progressive inroads into the consumer and enterprise domains over the last few years. IHS Markit forecasts that the global installed base of consumer VR headsets will reach 31 million at the end of 2018 and is currently expected to expand to 68 million by the end of 2022 [4]. This shows good adoption progress but clearly falls short of mass-market adoption.

Figure 3. World VR headset installed base by source platform (in millions)

VR technology adoption remains hindered for a variety of reasons:

- Viewing hardware and source device computing costs: Personal computer and console-based VR headsets remain expensive to buy and come in addition to the source computing device required to play back the interactive VR content. This limits the addressable audience to those with powerful computing platforms, which are typically not used in day-to-day personal computing. While many enthusiasts have computers with significant processing and graphics capabilities, most people use laptop-class devices that offer these capabilities at a lower performance level.

- Resolution and visual quality remain low: Display resolution of VR headsets is improving slowly, but still lacks the detail of today’s highest definition video. Even as hardware improves to support better resolutions, rendering interactive content at higher resolutions takes increased amounts of local computing power, which leads to a more expensive computing platform for the end user. Low visual quality in a VR experience reduces realism, leading to less overall usage and shorter sessions.

- Mobility remains an issue and impacts usability: VR headsets are typically wired to the computing device which impacts usability by restricting freedom of movement. Even with the use of local wireless solutions, which are expensive, the need to recharge those units reduces operational use while maintaining a separate piece of technology adds to the overall complexity.

2.3.2. Solution: The case of Cloud AR/VR

The most significant factor in Cloud AR/VR solutions is the transfer of the processing capability from the local computer to the cloud. A high-capacity, low-latency broadband network allows for responsive interactive feedback, real-time cloud-based perception, rendering, and real-time delivery of the display content. Cloud AR/VR is a new type of AR/VR that has the potential to alter the current business model and extend operators’ cloud platform services.

- Cloud VR solutions execute and render applications with cloud-based processing resources and stream the necessary view to thin client headsets. These devices will be significantly cheaper than thick devices and should also offer a longer lifespan as there are no local resource limits.

- When offloading the GPU-compute requirements to the cloud, the resolution of the viewport content could be optimized before streaming to end user headsets over 5G networks.

- Cloud-based rendering of content delivered to thin client headsets could support a more mobile implementation of VR and offer longer battery life.

Cloud AR/VR is not a single service. Instead, it represents a myriad of the most essential services in the 5G era, such as Cloud Gaming and Advertisement. These services are marked by the concept of “Cloud Applications, Broad Pipes, and Smart Clients”.

3. Solution: Cloud AR/VR – How does it work?

3.1. Cloud AR/VR requirements

3.1.1. AR service and technical requirements

Augmented Reality (AR) within this document refers to any technology to extend or enhance the perception of the real, physical world with digitally rendered information. The term “AR” in a narrow sense mostly refers to an overlay of visual information that is anchored in the real world (e.g. attached to the left corner of a specific object), while the terms “MR” or “Spatial Computing” usually refer to visual information that is perfectly merged into the real world surrounding, e.g. if a virtual object is partially hidden by a real-world object the virtual object is shown only partially. Real-time spoken translation of a real-world person or a spoken guide book adapting to the visual scene can be considered examples of audio augmented reality.

Use cases for AR are almost endless and it is expected that AR will become the future visual interface to digital information systems. Due to the immature state of AR, for reasons which are explained below, initial use cases have centred on consumer experiences such as gaming. As technology is evolving quickly, there is a strong uptake in industrial AR applications such as retail, assembly, logistics and training. One of the first consumer AR applications that made a global impact was the game Pokémon Go[2] by Niantic. In 2017 Niantic claimed that the game was downloaded 750 million times and that 65 million people play it monthly around the world.

Today AR experiences are mostly consumed through AR enablers incorporated into smartphones and tablets. This is primarily due to the unavailability of affordable head-worn AR devices (“AR glasses”) that provide a good user experience. Available AR glasses such as Microsoft’s HoloLens[3] or Magic Leap’s One[4] are expensive and limited in capabilities; however, they are the first iteration of head-worn AR devices that will ultimately provide the full AR experience. Significant usability lessons have been learned from mobile and head-worn AR devices, leading to a new phase of devices with more relevant features and improved ergonomics.

The major difference to VR is that, since AR blends digital information with the real world, it requires an understanding of the physical environment around the user in real time. The accuracy of this real-world understanding also determines the level of AR that a service can provide. For example, rendering a virtual object on a real table requires not only an accurate understanding of the table’s geometry and position within the real world’s coordinate system, but also knowledge of real objects on the table that may partially hide the virtual object. A real object may be semi-transparent (e.g. a bottle of water), which needs to be understood and reflected in the rendering of the virtual object as well. Additionally, for an exact visualization of the virtual object, the current lighting conditions of the environment, specifically at the location of the virtual object, need to be considered.

In short, while VR is about rendering an existing, completely virtual model according to the user’s position, AR additionally requires an understanding of, and adaptation to, the real-world scene in real time, necessitating additional sensors, scene recognition and computation. Today’s mobile AR solutions typically utilize libraries such as ARCore[5] from Google or ARKit[6] from Apple that provide the required understanding of the real world and also ensure the proper rendering of the virtual objects according to that understanding (see Table 1 below). As these libraries typically run on mobile hardware platforms, their capabilities are limited. To overcome those limitations and enable true AR experiences independent of the end user devices, cloud-based AR can be used.

Table 1. Comparison of Unity’s AR Foundation, ARCore and ARKit[7]

While cloud-based VR focuses on rendering the virtual scene with low latency based on user input (relative position/movement within the virtual world), cloud-based AR additionally requires services to analyse incoming sensor data (such as a stereo-camera feed used to create a 3D map of the surroundings), which calls for different computation models. Depending on the level of AR desired, that computation ranges from computer-vision-based algorithms to AI/ML-based algorithms which understand the semantics of the real world.

This implies two major differences between cloud-based AR vs. cloud-based VR:

- The upstream bandwidth for the sensor input needed to understand the real world is significant and can be as high as or even higher than the downlink requirements; the network architecture supporting cloud-based AR therefore needs to cater for that.

- The cloud infrastructure must not only be prepared for high-performance rendering but also for general computer vision algorithms as well as AI/ML which may require different types of underlying acceleration hardware, e.g. specialized AI processors like Google’s TPUs[8].

The latency requirement for the overall end-to-end chain (usually referred to as Motion-to-Photon (MTP) latency) in VR is commonly targeted at 20 ms (more details in the following chapters). However, in AR the requirement is even stricter, as visual changes are not only triggered by the motion of the user but also by any change (e.g. lighting or natural object movement) in the surrounding world. Some sources identify the limit for a perfect AR experience at 5 ms[9]. To accommodate this requirement, the overall chain needs to be carefully designed and optimized across the following stages:

- Environment sensing (cameras, depth sensors, …) and pre-processing of the data to prepare for submitting to an edge cloud; pre-processing can be any form of processing the raw sensor data, for example, to reduce the data volume to be sent to the cloud; the drawback is that on-device pre-processing consumes energy and power or requires bespoke hardware

- Input tracking (inertial movement, input devices, eye-tracking, …) and pre-processing of the data; this should run in parallel with the environment sensing

- Transmission of environment and input tracking data to the edge cloud (upstream)

- Analytics of the environment and input tracking data to update the real world model and trigger reaction to the user input

- Rendering of the virtual objects

- Transmission of the rendered data to the end device (downstream)

- Display of the virtual objects on the end user device

In order to reduce perceived latencies, technologies such as warping, i.e. correcting the rendered image in the last stage based on an updated pose estimation, can be deployed. Also, it should be noted that some steps can be parallelized.
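The chain above can be read as a latency budget. The following is a minimal sketch, not a measurement: every per-stage value is a hypothetical placeholder chosen for illustration, and the only parallelism modelled is the one stated in the list (environment sensing and input tracking running side by side). The names `StageLatencies`, `end_to_end_latency_ms` and `within_budget` are assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageLatencies:
    """Per-stage latencies in milliseconds (all values are illustrative)."""
    environment_sensing: float
    input_tracking: float
    uplink: float
    analytics: float
    rendering: float
    downlink: float
    display: float


def end_to_end_latency_ms(s: StageLatencies) -> float:
    """Sum the chain, treating sensing and input tracking as parallel stages."""
    capture = max(s.environment_sensing, s.input_tracking)
    return capture + s.uplink + s.analytics + s.rendering + s.downlink + s.display


def within_budget(s: StageLatencies, budget_ms: float) -> bool:
    """Check the summed chain against a motion-to-photon target."""
    return end_to_end_latency_ms(s) <= budget_ms


# Hypothetical stage values, not taken from any measurement.
example = StageLatencies(
    environment_sensing=3.0, input_tracking=1.0, uplink=2.0,
    analytics=4.0, rendering=5.0, downlink=2.0, display=3.0,
)
print(end_to_end_latency_ms(example))  # 19.0
print(within_budget(example, 20.0))    # True  (VR target from the text)
print(within_budget(example, 5.0))     # False (strict AR target from the text)
```

With these placeholder values the chain fits the 20 ms VR target but is far from the 5 ms AR limit, which illustrates why late-stage corrections such as warping and further parallelization are of interest.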

3.1.2. VR service and technical requirements

VR business applications are very diverse, including film and television viewing, games, education, e-commerce, travel, medical applications and so on. From the perspective of the audience, these applications can be divided into those targeted at business and industry use and those targeted at individual consumers.

From the perspective of perceived experience, VR experiences can be divided into 3DoF (degrees of freedom) VR services and 6DoF VR services. 3DoF permits rotational user movement around the x, y and z axes, where the centre of the user’s head denotes (0, 0, 0), thus allowing the user to look around from a single fixed viewing point. 6DoF permits both rotational and translational movements within a volumetric space, thus allowing the user to freely traverse a VR scene.

The essence of Cloud VR is “put content into the cloud, put computing into the cloud”, which creates certain dependencies on network capabilities and on computation for rendering. Therefore, at the current stage, we advocate dividing Cloud VR services into two categories according to the form of business interaction: weak interactive services and strong interactive services.

Typical weak interactive services include Cloud VR on demand and Cloud VR live broadcast; typical strong interactive services include Cloud VR communication and Cloud VR games.
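As an illustration of the 3DoF/6DoF distinction, a pose message sent from a headset could be modelled as below. This is a sketch only: the class and field names, the use of degrees and metres, and the yaw/pitch/roll convention are assumptions made for this example and are not taken from any particular SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose3DoF:
    """Head orientation only, in degrees; the viewing point stays at (0, 0, 0)."""
    yaw: float
    pitch: float
    roll: float


@dataclass(frozen=True)
class Pose6DoF(Pose3DoF):
    """Orientation plus translation (metres) within the volumetric space."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


def degrees_of_freedom(pose: Pose3DoF) -> int:
    """Report how many degrees of freedom a pose message carries."""
    return 6 if isinstance(pose, Pose6DoF) else 3


looking_left = Pose3DoF(yaw=-30.0, pitch=0.0, roll=0.0)
stepped_forward = Pose6DoF(yaw=-30.0, pitch=0.0, roll=0.0, z=0.5)
print(degrees_of_freedom(looking_left))     # 3
print(degrees_of_freedom(stepped_forward))  # 6
```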

3.1.2.1. Weak interactive VR services

For VR scenes that have low levels of interactivity, such as VR live broadcast or VR movies, the following aspects need to be considered to ensure a high-quality experience:

- Promote the use of lightweight cameras and VR Head Mounted Displays (HMDs) to reduce terminal cost and power consumption.

- Improve the portability and mobility of cameras and terminals to enable a VR experience anytime, anywhere. This can only be attained through close cooperation between the terminal, the network and the cloud.

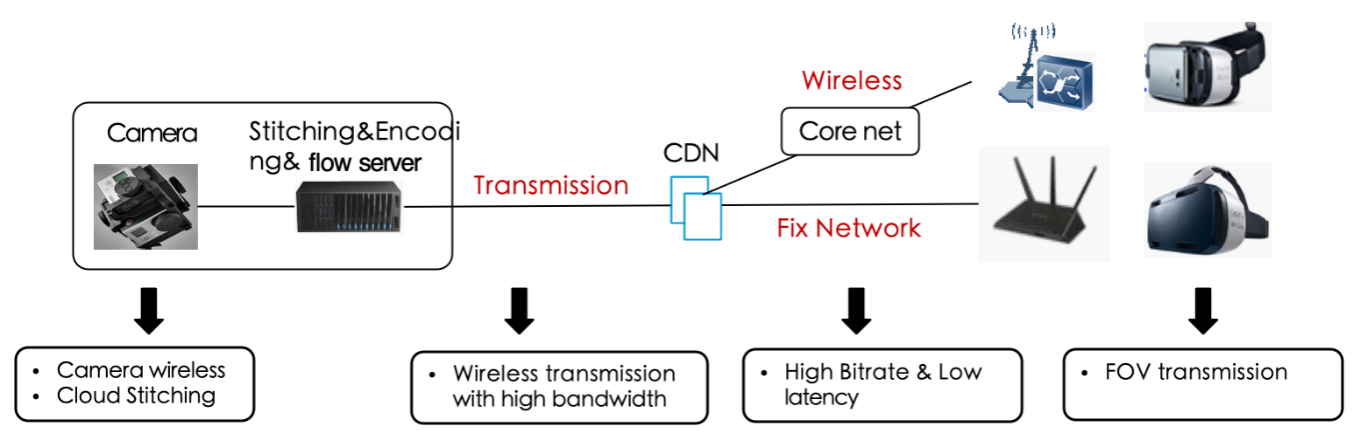

Consider VR live broadcast as an example whose current approach is shown in Figure 4.

Figure 4. Solution scheme for VR live broadcast

In order to make the camera and HMD lightweight and low-cost, it is necessary to simplify the original camera and VR terminal processing and to offload some of the complex processing from the end side to the cloud. The specific options that can be taken are as follows:

- Relocating CPU and GPU functions, such as stitching, projection and encoding, to the cloud, so that the camera only retains the functions of shooting and video uploading.

- Connecting the camera to a mobile network for wireless video upload, to support mobile capture scenarios (such as drone shooting) and locations that are difficult to reach with wired transmission (such as outdoor venues).

- Introducing a field-of-view (FOV) scheme, in which the 360° VR video is segmented according to viewing perspective. The user does not need to download and decode the full 360° view; instead, the video segments corresponding to the current perspective are obtained in real time and decoded, which reduces the required transmission bandwidth and decoding capability.
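The FOV option in the list above can be sketched as a tile-selection step. The example assumes a simplified layout that is not specified in this document: the 360° video is cut into equal-width vertical tiles over the yaw range, and only the horizontal field of view is considered. The function name `visible_tiles` and the tile count are hypothetical.

```python
import math


def visible_tiles(yaw_deg: float, fov_deg: float, num_tiles: int) -> list[int]:
    """Return indices of the vertical tile columns that overlap the viewport.

    Tile i covers yaw angles [i * w, (i + 1) * w) with w = 360 / num_tiles.
    Angles wrap around, so a viewport centred near 0 degrees also selects
    the last tiles of the panorama.
    """
    if num_tiles <= 0:
        raise ValueError("num_tiles must be positive")
    if fov_deg >= 360.0:
        return list(range(num_tiles))
    tile_width = 360.0 / num_tiles
    first = math.floor((yaw_deg - fov_deg / 2.0) / tile_width)
    last = math.floor((yaw_deg + fov_deg / 2.0) / tile_width)
    if last - first + 1 >= num_tiles:
        return list(range(num_tiles))
    return [i % num_tiles for i in range(first, last + 1)]


# Hypothetical setup: 8 tiles of 45 degrees each, 90 degree horizontal FOV.
tiles = visible_tiles(yaw_deg=10.0, fov_deg=90.0, num_tiles=8)
print(tiles)           # [7, 0, 1]
print(len(tiles) / 8)  # 0.375, the fraction of tiles that must be fetched
```

In this simplified setting only three of the eight tiles are fetched and decoded for the current perspective. A real deployment would also have to handle the vertical direction and fetch margins for fast head turns, which are outside the scope of this sketch.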

3.1.2.2. Strong interactive VR services

Cloud VR gaming is a typical example of a strong interactive VR service. At present, VR games have the following pain points:

- HMD + mobile phone or integrated HMD solution: the terminal chip has limited processing capability and can only run lightweight VR games. The game experience and control (generally 3DoF) are poor, which makes it difficult to attract users.

- HMD + PC-based solution: the PC’s powerful CPU/GPU allows complex game processing and rendering, providing a better VR experience, but the overall setup is large, not portable and costly.

These problems have greatly limited the popularity of VR games.

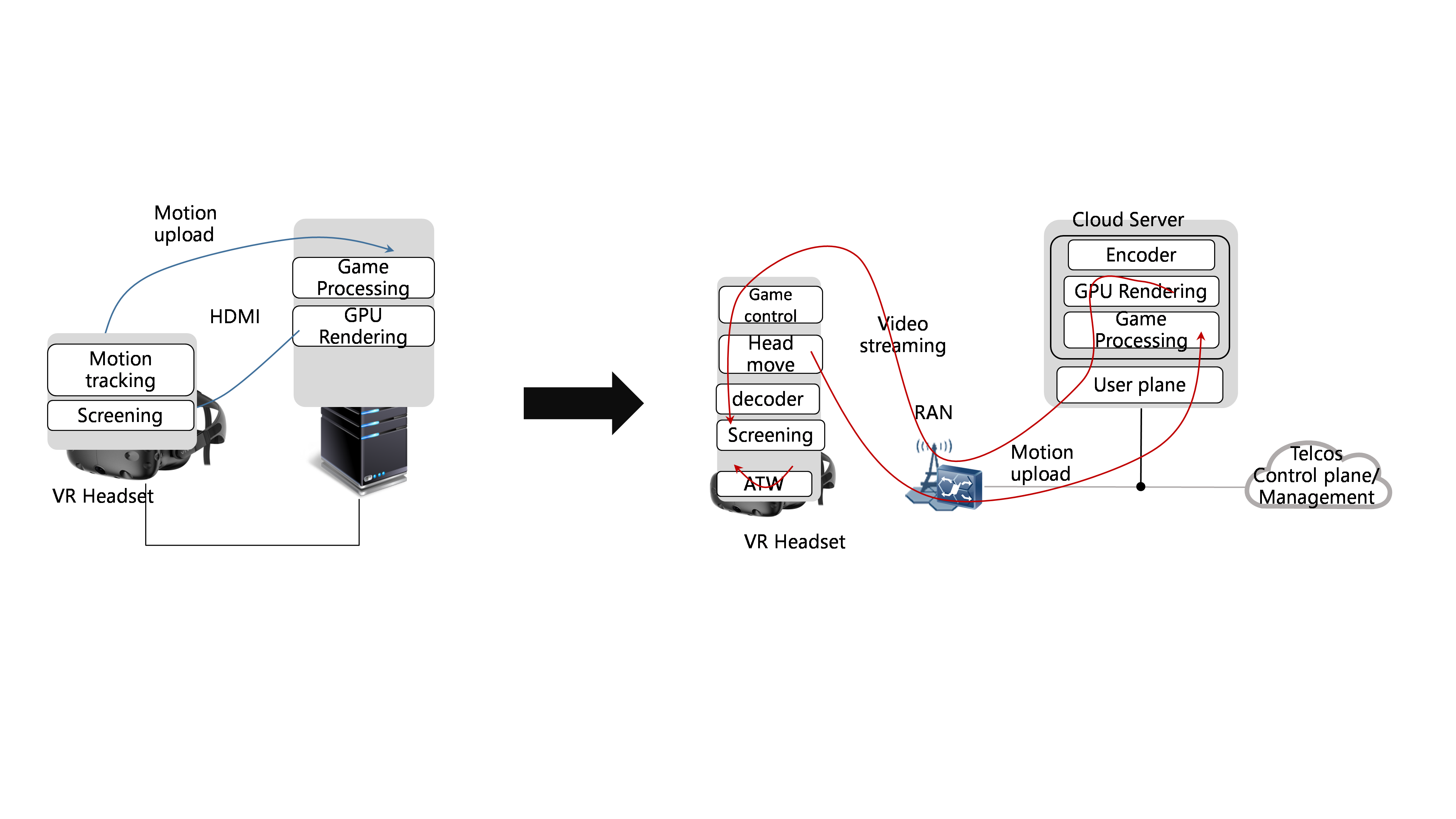

A better approach to solving these problems is to offload complex game processing and rendering to the cloud.

Figure 5. Migration of local processing to the cloud

The specific plan would be as follows:

- Deploy game processing and rendering in the cloud: VR HMDs only need to complete motion capture and display, reducing the processing complexity and cost of the consumer equipment.

- The cloud game host includes an encoding module and the terminal contains a decoding module: the original game picture is encoded in the cloud and decoded on the terminal. This addresses the problem that, at high resolution and frame rate, the uncompressed picture requires a bandwidth of up to several gigabits per second and is difficult to transmit directly over the mobile network.

- The HMD adopts asynchronous frame insertion, such as Asynchronous Timewarp (ATW) technology, which generates an intermediate frame locally from the previous frame according to the latest head motion. This keeps the MTP latency below 20 ms in spite of the additional network transmission and codec delay, and avoids the vertigo caused when the game picture from the network side cannot be delivered in time.
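The need for the encoding and decoding modules follows from simple arithmetic on the uncompressed picture. The sketch below uses hypothetical resolutions and frame rates and assumes 24 bits per pixel; none of these values are specified in this document. The 100 Mbps link rate is the order of magnitude quoted for 5G in the following section.

```python
def raw_bitrate_gbps(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> float:
    """Uncompressed video bitrate in gigabits per second (1 Gbit = 1e9 bits)."""
    return width * height * fps * bits_per_pixel / 1e9


def required_compression_ratio(raw_gbps: float, link_mbps: float) -> float:
    """How many times the raw stream must shrink to fit the given link rate."""
    return raw_gbps * 1000.0 / link_mbps


# Hypothetical stream formats.
qhd_60 = raw_bitrate_gbps(2560, 1440, 60)
uhd_90 = raw_bitrate_gbps(3840, 2160, 90)
print(round(qhd_60, 2))  # 5.31
print(round(uhd_90, 2))  # 17.92
print(round(required_compression_ratio(qhd_60, 100.0), 1))  # 53.1
```

Under these assumptions even the smaller format needs several gigabits per second uncompressed, so it has to be compressed by a factor of roughly fifty to fit a 100 Mbps link.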

3.1.2.3. Network bandwidth and latency requirements

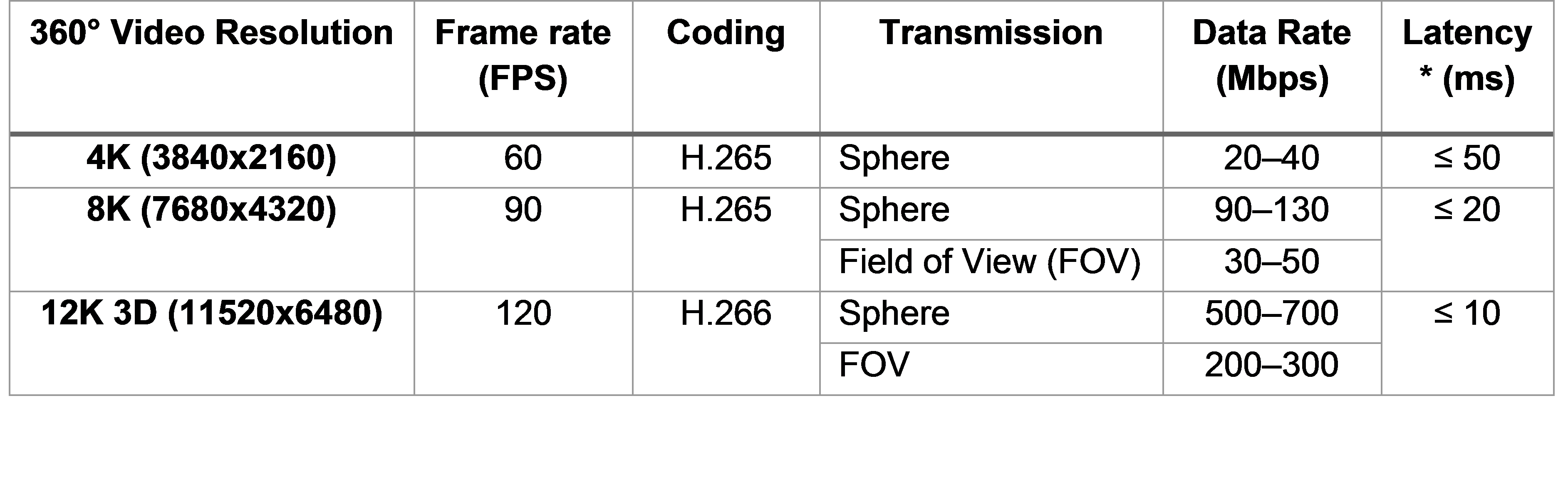

Currently, 4K panoramic video requires a data rate of only 20 to 40 Mbps and a latency of 50 ms. As 5G networks contribute to considerable improvements in data rate (more than 100 Mbps) and latency (less than 10 ms), users will enjoy more comfortable viewing experiences.

Table 2. Network data rate and latency requirements of 360° Cloud VR video

*Note: The latency in the table indicates the round trip time (RTT) latency requirement on the radio access network (RAN) side. The rendering and encoding/decoding latency is not included.

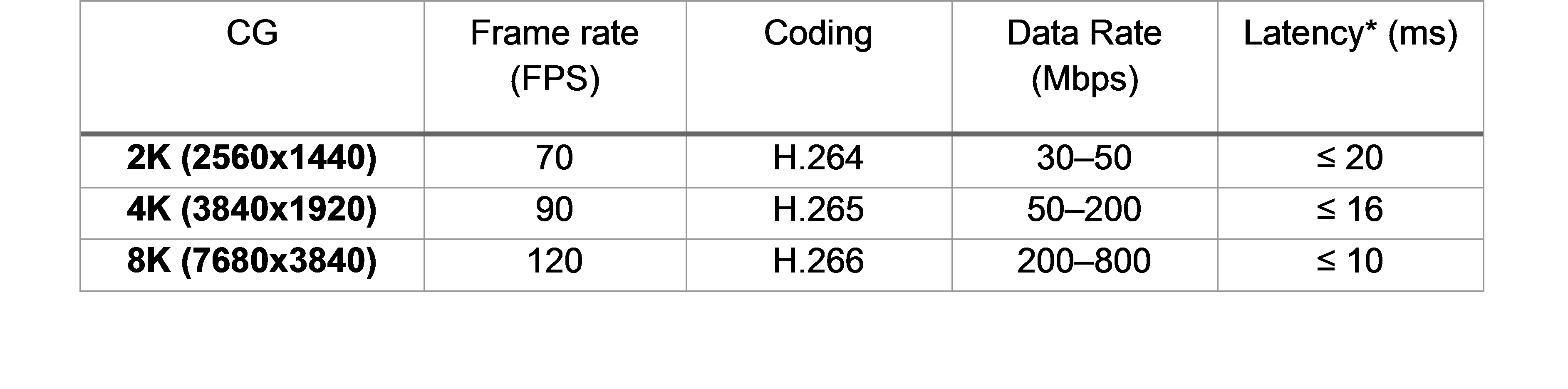

CG Cloud VR delivers 2K services in its infancy. For a better visual experience, the image resolution has to be 4K or 8K. In addition, strong interaction makes CG Cloud VR services more latency-sensitive.

Table 3. Network data rate and latency requirements of CG Cloud VR

*Note: The latency in the table indicates the round trip time (RTT) latency requirement on the RAN side. The rendering and encoding/decoding latency is not included.

Operators can selectively launch 360° VR or CG VR services based on their own service and network capabilities. Since 360° VR video is a derivative of video services, operators equipped with traditional video platforms can swiftly run such VR services at low cost. In order to deploy CG VR services, which have high requirements on GPU rendering and streaming, operators have to add GPU resource pools to further supplement their existing cloud platforms and data centres.

3.2. An End-to-End service architecture for Cloud AR/VR

3.2.1. Architecture Overview

The general service architecture for Cloud AR/VR is depicted in the following figure. The computational aspects of the AR/VR experience are processed in the Cloud Edge with the visual experience delivered to the user terminal via a low latency, high capacity, and guaranteed throughput 5G network connection. That same 5G network connection also transports the input sensor messages from the terminal to the application processing.

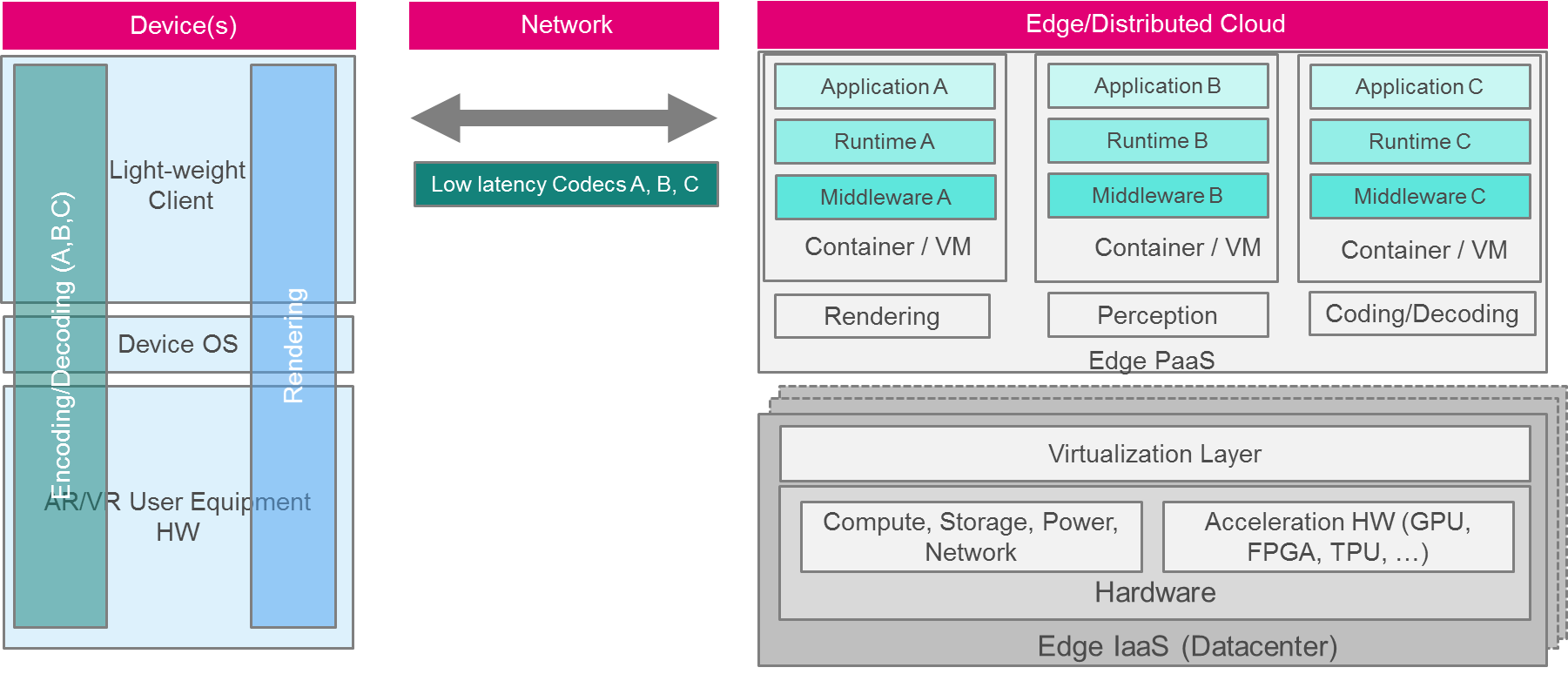

Figure 6. Cloud VR/AR Reference Architecture

The lightweight client function in the terminal device provides the minimum set of capabilities that need to be present for rendering the VR/AR experience, with all additional functionality moved to the Cloud. As there is still a significant amount of data exchanged between the cloud-based processing functions and the user’s terminal, the use of low latency coding techniques is required – even if these come with less than optimal compression factors.

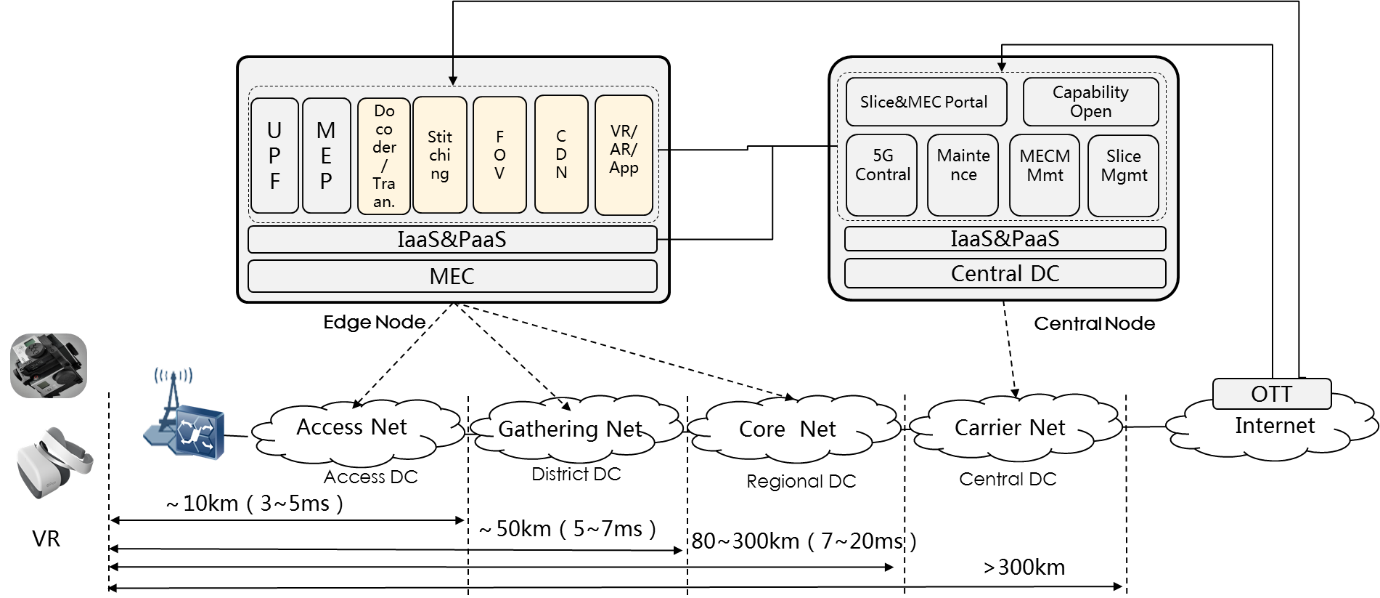

Different Cloud AR/VR services require that the network can load and manage different services and applications easily. With edge computing, different Cloud AR/VR services and applications are deployed in different network locations as needed. Overall network latency has a direct relationship with the length of a signal path and the number of intermediary routing/switching elements within that path. The following figure highlights the impact on end-to-end latency as network-side functions move closer to the edge of the core network.

Figure 7. Cloud VR/AR Network Latency Aspects

The cloud architecture is divided into two layers:

- The central node mainly handles the control plane and management plane functions of the 5G network: specifically, control plane signalling, service flow processing, policy scheduling and capability exposure, as well as network operation and maintenance, node management and slice management. These aspects are generally related to session establishment and do not impact the interactive nature of the VR experience.

- The edge node mainly handles the user plane function and the edge computing platform function. The edge computing platform is responsible for the deployment and governance of VR- and AR-related media and vision capability services. To better implement the deployment and governance of edge services and applications, edge-side nodes can be divided into layers: edge hardware, IaaS, PaaS, Cloud AR/VR enablement and Cloud AR/VR applications.

3.2.2. Existing Standards Overview

ISO/IEC 23090-2 OMAF [1]: Omnidirectional Media Application Format. It specifies the omnidirectional media format for coding, storage, delivery, and rendering of omnidirectional media, including video, images, audio, and timed text. In an OMAF player, the user’s viewing perspective is from the centre of the sphere looking outward towards the inside surface of the sphere.

ETSI GS MEC 003 [3]: The Multi-access Edge Computing (MEC) initiative is an Industry Specification Group (ISG) within ETSI. The purpose of the ISG is to create a standardized, open environment, which will allow the efficient and seamless integration of applications from vendors, service providers, and third parties across multi-vendor Multi-access Edge Computing platforms. The group has already published a set of specifications focusing on management and orchestration of MEC applications, application enablement APIs and service Application Programming Interfaces (APIs). The ISG also actively works to help enable and promote the MEC ecosystem by hosting Proof-of-Concept (PoC) and MEC Deployment Trial (MDT) environments as well as supporting and running hackathons.

3.2.3. IaaS

The basis of the end-to-end AR/VR Cloud service delivery is cloud resources which are commonly offered through an infrastructure-as-a-service (IaaS) model. This model allows an abstraction of the actual physical infrastructure and provides resources on-demand. A separation of the IaaS and higher layers (see PaaS below) enables different technical but also business models including those for operators providing their own, possibly distributed, IaaS.

The IaaS for cloud AR/VR may be shared with other non-VR scenarios to make optimal use of cloud economics. Depending on the specific requirements towards the IaaS to be used (which are further described below), the physical hardware and IaaS stack may be owned and operated by an existing public cloud provider such as Amazon Web Services[10], Microsoft Azure[11] or Google Cloud Platform[12], by operators who provide their own edge compute (i.e. distributed IaaS) infrastructure, or by other entities which provide local compute infrastructure. A single Cloud AR/VR service may even utilize different IaaS providers for different parts of its application, depending on the individual requirements (e.g. in terms of latency). This creates a certain complexity, which needs to be addressed by the upper layers of the architecture, especially the Platform-as-a-Service (PaaS) layer as described within this document.

A distributed IaaS may serve different users: internal services (realized as Virtual Network Functions, VNFs), internal higher-level services (such as operator-owned AR/VR services) as well as external services. Depending on the type of services being hosted, different requirements as outlined below apply.

3.2.3.1. Technical Requirements

An IaaS for Cloud AR/VR services needs to fulfil specific requirements to deliver the respective use cases. Those requirements include:

- Connectivity from the user device towards the IaaS (further discussed in chapter 2.7) needs to deliver latency and jitter below a specific threshold. The threshold is use-case dependent, but for interactive AR/VR the network end-to-end latency should be below 10 ms (see QCI 80 as introduced in 3GPP TS 23.203 Rel-15 [2]). To address latency and jitter requirements from a transport network perspective, the concept of edge computing is used, i.e. the IaaS is deployed in close proximity (in terms of network topology) to the user equipment to shorten the signal propagation delay. As a rule of thumb, light in fibre takes 1 ms RTT per 100 km of distance.

- The IaaS needs to provide specific compute capacity in terms of processing, storage and network, but also in terms of GPU, FPGA, TPU and other required resources. While the specific demand is driven by each use case, a common requirement is to virtualize all physical resources in a way that allows efficient sharing of those resources among different users. Compute capacity also includes the requirement to provide a specific compute latency for a given service, as it adds to the overall end-to-end latency of the service. For example, if the compute latency for a given service already exceeds 10 ms, an end-to-end latency below 10 ms cannot be delivered. To optimize performance, especially latency, within the IaaS Data Centre (DC), SmartNICs can be considered as well.

- The interface to access the IaaS (which typically comprises an API to create virtual machines or deploy containers) should be standardized so that the effort for a PaaS layer integrating that infrastructure is minimized as much as possible.

- Support for state-of-the-art security mechanisms, i.e. full separation of tenants.
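The fibre rule of thumb and the compute-latency remark in the list above can be combined into a rough placement check. This is a sketch under stated assumptions: it uses only the 1 ms RTT per 100 km rule from the text, treats the budget as a single overall figure, and the compute and other-network values in the examples are hypothetical. The function names are introduced here for illustration.

```python
KM_PER_MS_RTT = 100.0  # rule of thumb from the text: 1 ms RTT per 100 km of fibre


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fibre for a given one-way distance."""
    return distance_km / KM_PER_MS_RTT


def max_edge_distance_km(budget_ms: float, compute_ms: float,
                         other_network_ms: float = 0.0) -> float:
    """Largest fibre distance that still fits the budget; 0.0 if nothing remains."""
    remaining_ms = budget_ms - compute_ms - other_network_ms
    return max(0.0, remaining_ms * KM_PER_MS_RTT)


print(propagation_rtt_ms(50.0))               # 0.5
# Hypothetical split of a 10 ms budget: 6 ms compute, 2 ms radio and switching.
print(max_edge_distance_km(10.0, 6.0, 2.0))   # 200.0
# Compute latency above the budget leaves no room for the network at all.
print(max_edge_distance_km(10.0, 12.0))       # 0.0
```

The last call mirrors the example in the list: once compute latency alone exceeds the target, no edge placement can recover the budget.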

3.2.3.2. Interfaces / Standardization

Although the physical hardware requirements depend on the actual use cases to be served, there are ongoing efforts to standardize the hardware layout, most notably the Open Compute Project (OCP)[13] and Project19[14], which aim to define a cross-industry common server form factor, creating a flexible and economic data centre and edge solution for operators of all sizes.

Typical choices for the cloud orchestration part of the IaaS stack include OpenStack[15], Apache CloudStack[16] and OpenNebula[17]. These solutions are open source, and OpenStack in particular has gained a lot of support in the operator community. Members of the OpenStack Foundation include AT&T, China Mobile, China Unicom, China Telecom and Deutsche Telekom.

Every IaaS stack comes in different flavours and versions; OpenStack, for example, consists of more than 20 components. In addition, each IaaS stack has to serve its specific users, so full harmonization seems unfeasible. It is therefore the role of the upper PaaS layer to integrate the different variations of the IaaS stacks available.

Recent efforts have been made to specifically standardize and create stacks that focus on the aspect of edge computing, e.g. StarlingX[18] that provides a fully integrated OpenStack platform with a focus on High Availability, Quality of Service, Performance and Low Latency.

In addition to open source IaaS stack solutions there are also commercial offerings such as Azure Stack. Those solutions can also be utilized in an end-to-end cloud AR/VR service stack as long as they can fulfil the specific requirements.

3.2.3.3. Solution space and best practices

For both the hardware and the IaaS software stack, two major choices have to be taken:

- Deployment model: self-deployment or 3rd party?

- Operational model: own operation or through a 3rd party?

For 3rd party deployment and operations – depending on the chosen IaaS stack – a large vendor landscape exists. While sourcing the hardware infrastructure and the IaaS stack from a single vendor may have certain benefits, it can also create an undesirable lock-in for future updates. In general, it is therefore recommended to avoid dependency on a single vendor for both hardware and IaaS software deployment and/or operations.

3.2.3.4. Open Challenges

From an operator perspective deploying a distributed IaaS raises particular challenges including:

- Deployment of special hardware such as GPUs: operators typically have no experience with this type of hardware, which results in lower buying power, operational challenges and basic infrastructure challenges such as the readiness of data centres in terms of power, air conditioning, etc. The current models for virtualizing such hardware, and thus exploiting cloud economics, are also more challenging, as operators will initially have no users for GPUs outside specific services. Finally, as the technological evolution of GPUs is very fast, a short replacement cycle has to be considered. It is therefore important for the industry to create and engage in an ecosystem with use cases that scale quickly, in order to understand detailed infrastructure requirements upfront and to start monetization quickly.

- Physical place of deployment: as outlined above, latency is directly affected by the physical placement of an IaaS within the network: the closer the infrastructure is deployed to the user equipment, the lower the latency. On the other hand, deployment and operational costs grow exponentially the further the IaaS is distributed (in the most extreme case, infrastructure is attached to every cell tower of a mobile network). The actual placement of the infrastructure therefore has to be decided carefully, depending on the existing network topology, the breakout method, physical assets such as space, power supply, air conditioning and physical security of the equipment, and the actual requirements of the AR/VR service to be fulfilled. This decision may also change over time depending on other changing factors in the end-to-end delivery chain, such as decreasing latency on the access bearer (e.g. 4G LTE vs. 5G NR).

- Ownership model: while some operators may want to own and operate the IaaS themselves in order to realize new monetization opportunities, others may not want to go down that path and may let 3rd parties build the IaaS in their network. The decision depends very much on the operator’s abilities, resources, ambitions and overall strategy, and is therefore a very individual one.

3.2.4. PaaS

The PaaS layer for cloud-based AR/VR is key to the adoption as it needs to provide the necessary capabilities as described in the AR/VR requirements section towards the application layer. In order to be attractive for AR/VR application developers, the PaaS needs to cover a relevant footprint in terms of user reach and also provide easy-to-use services relevant to the AR/VR application developer such as cloud-rendering or computer vision.

3.2.4.1. Technical Requirements

Depending on the specific use-case (e.g. AR vs. VR) and architecture (e.g. split rendering versus cloud-streaming) the PaaS layer needs to provide a wide range of different services. While specialized solutions may achieve higher efficiency, more generic approaches will have higher flexibility. The key requirements for a cloud-based AR/VR PaaS layer are:

- Provide dedicated AR/VR services utilizing the necessary IaaS capabilities (such as acceleration hardware) to fulfil the service requirements (e.g. 20 ms motion-to-photon latency for interactive VR services)

- Manage the discovery and provisioning of the underlying IaaS layer transparently to the application, i.e. make sure that proper infrastructure is available for the service being executed with no additional effort for the application developer

- In the context of mobile (nomadic) application usage, make sure that connectivity towards the infrastructure is seamlessly managed (e.g. cellular vs. WiFi)

- In the context of multi-user applications (e.g. multi-player AR games or multi-user VR CAD modelling) ensure the low-latency data exchange between multiple users

3.2.4.2. Interfaces / Standardization

As cloud-based AR/VR is just emerging, there are no standardized approaches yet.

Existing standardization efforts in the industry, like Khronos Group’s OpenXR[19] or the ETSI Industry Specification Group on AR Framework[20], are starting to look into cloud-based approaches.

3.2.4.3. Open Challenges

The key challenge is to balance coverage (footprint in terms of users but also in terms of supported devices/applications), specificity of approach (i.e. design for specific rendering APIs versus generic remote rendering) and commercial viability. While the overall VR ecosystem is more mature and therefore better suited to deploying the first commercially viable solutions, in the long run the AR ecosystem will have more opportunities.

3.2.5. Software Enablers / AR/VR Platforms

3.2.5.1. Technical Requirements

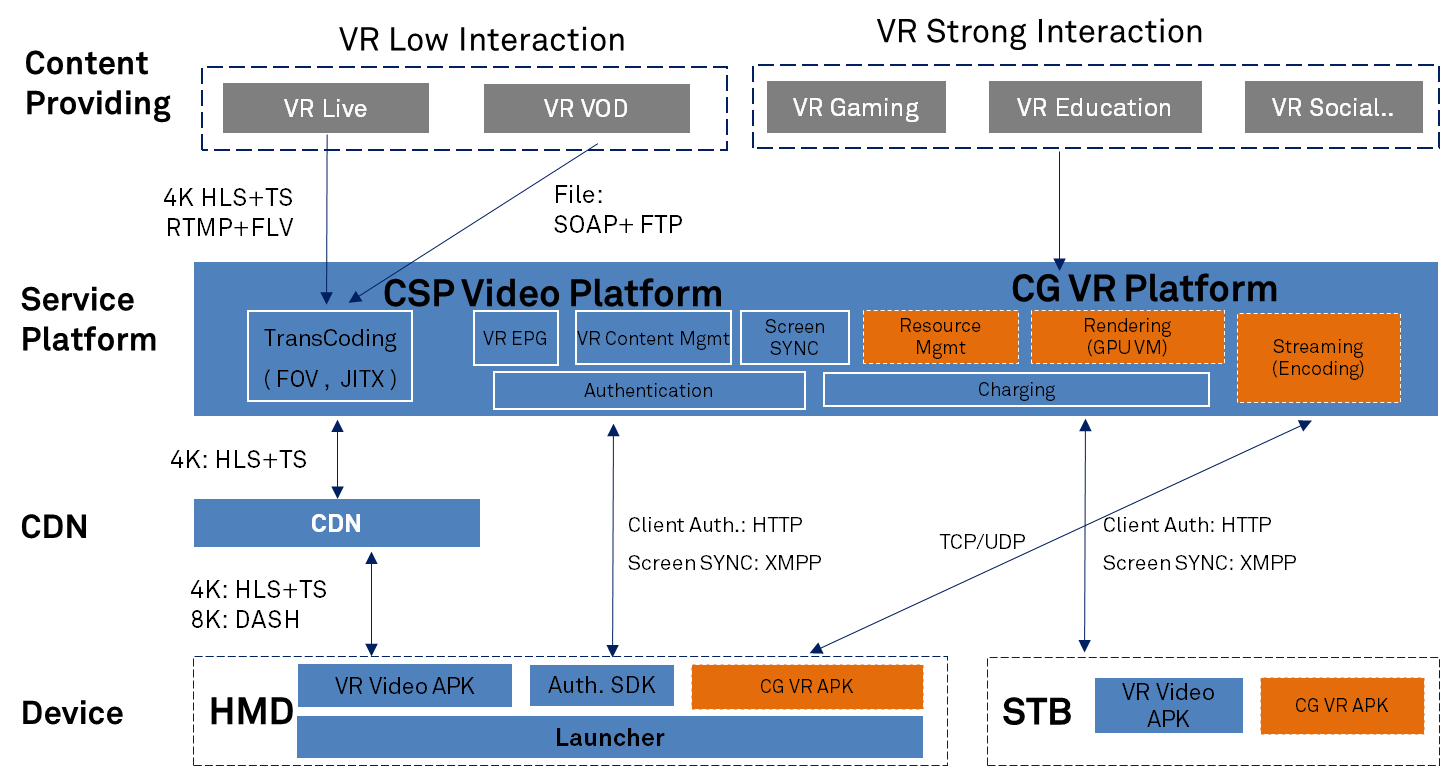

Many operators have already launched video-related services which include 360° video formats. In the initial stage of Cloud VR service deployment, we recommend that operators consider the legacy video service system and support the development of Cloud VR services by superimposing some capabilities.

For Cloud VR terminals, the early recommendation was to take the form of a stand-alone HMD. In the future, with the enhancement of the set-top box capability, the set-top box can be considered as a gateway to bridge with the HMD.

Fully utilize the existing CSP Video Platform and CDN system to support Cloud VR services, such as Cloud VR VOD, live, and so on.

- Upgrade the Electronic Program Guide (EPG) and Content Management System (CMS) to support VR content. The CMS needs to be configured to indicate the VR type and subtype of the content (such as 4K VR, 8K VR, etc.). The operator can promote VR content to the user through the STB EPG, so the EPG needs to add VR program information accordingly. Usually, in the initial stage of VR service deployment, consumers select and view VR content through the UI of the standalone VR HMD, and the STB EPG is only used for display and promotion.

- Add an online/offline transcoding system to implement FOV slicing and DASH/HLS packaging and encapsulation;

- Add the Screen SYNC model to show the same content on the STB and the HMD (XMPP);

- Integrate the cloud rendering service and APK;

- Provide a unified client authentication interface and charging interface which can upgrade from existing components.

Figure 8. Solution space and best practices of Cloud VR FOV Solution

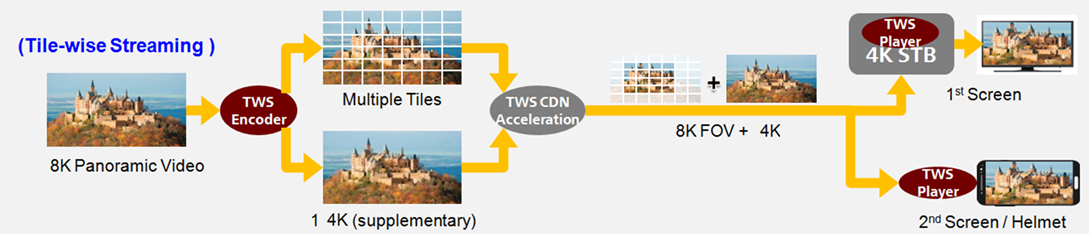

- Split the original 8K 360° panoramic video into multiple tiles (such as 42 tiles in the figure below) and generate a low-resolution 360° panoramic video (such as a 4K 360° panoramic video);

- All tiles and the low-resolution panoramic video are packaged in DASH using spatial relationship descriptors and distributed via the CDN;

- The client player retrieves the low-resolution panoramic video and those tiles which are located within the user’s field of view. When the user turns around and the field of view changes, the client requests new tiles from the CDN for the new field of view. Because a certain amount of time passes between the request for new tiles and the moment they can be rendered, the low-resolution panoramic video is displayed until the new tiles can be played.

Figure 9. Tile Wise Streaming
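
The tile selection step described above can be sketched as follows. This is a minimal illustration, not the method of any particular player: it assumes an equirectangular panorama split into a uniform grid, and approximates the viewport as a yaw/pitch rectangle (ignoring projection distortion near the poles). The function name, the grid size and the angle conventions are hypothetical.

```python
import math


def visible_tiles(yaw_deg, pitch_deg, hfov_deg, vfov_deg, cols, rows):
    """Return the (row, col) indices of tiles overlapping the viewport.

    Assumptions: the panorama is an equirectangular frame split into a
    uniform cols x rows grid, yaw covers [-180, 180) and pitch [-90, 90],
    with row 0 at the top and column 0 at yaw -180.
    """
    tile_w = 360.0 / cols
    tile_h = 180.0 / rows

    # Vertical extent of the viewport, clamped at the poles.
    top = min(90.0, pitch_deg + vfov_deg / 2)
    bottom = max(-90.0, pitch_deg - vfov_deg / 2)
    row_first = int((90.0 - top) // tile_h)
    row_last = min(rows - 1, math.ceil((90.0 - bottom) / tile_h) - 1)

    # Horizontal extent, shifted so that yaw -180 maps to 0 degrees.
    left = yaw_deg - hfov_deg / 2 + 180.0
    col_first = math.floor(left / tile_w)
    col_last = math.ceil((left + hfov_deg) / tile_w) - 1

    tiles = set()
    for r in range(row_first, row_last + 1):
        for c in range(col_first, col_last + 1):
            tiles.add((r, c % cols))  # wrap around the 360 degree seam
    return tiles


# Assumed example: 8 x 4 grid, 90 x 90 degree field of view.
before = visible_tiles(0, 0, 90, 90, cols=8, rows=4)
after = visible_tiles(45, 0, 90, 90, cols=8, rows=4)
to_request = after - before  # only these tiles need to be fetched from the CDN
print(sorted(before))
print(sorted(to_request))
```

Only the difference between the new and the old tile set has to be requested when the user turns; the low-resolution panorama covers the gap until those tiles arrive.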

3.2.5.2. Technical Requirements

Cloud VR services are extremely sensitive to network latency. Users may experience dizziness if their viewing experience is repeatedly hindered by excessive latency. Therefore, it is essential to keep the motion-to-photon (MTP) latency to less than 20 ms. The end-to-end system latency also has to meet this target in order to maintain high image quality. The methods of clamping down on the latency of the Cloud VR network are as follows:

- terminal-cloud asynchronous rendering,

- low-latency encoding and decoding optimization, and

- service assurance with network slicing.

3.2.5.3. Asynchronous Terminal-Cloud Rendering

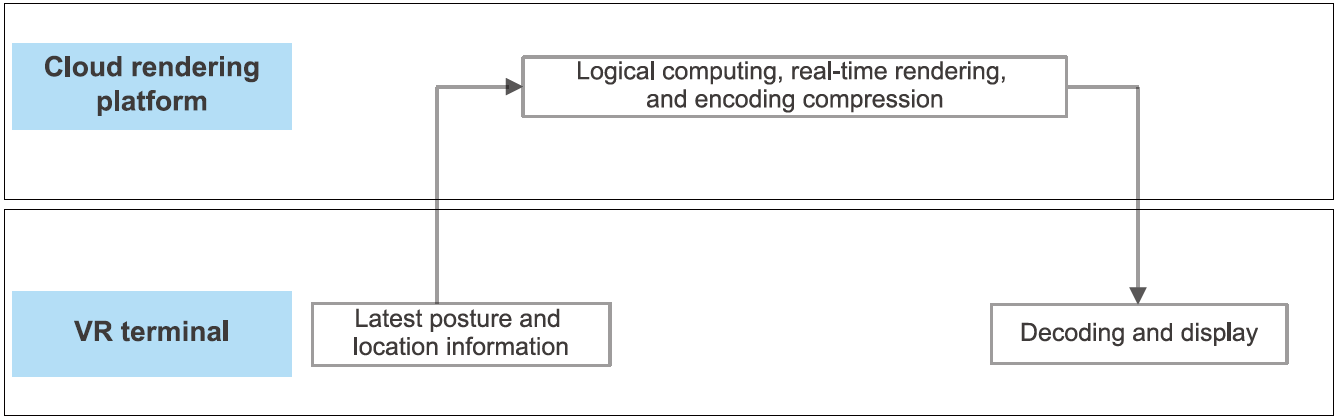

Cloud VR processing comprises cloud rendering and streaming, as well as terminal display. During cloud rendering and streaming, a Cloud VR terminal captures motions and transmits the information over the network to the cloud. After logic computing, real-time rendering, and encoding in the cloud, the information is compiled into video streams, which are then transmitted to terminals for decoding.

It is already difficult to maintain low MTP latency (≤ 20 ms), as VR terminals have to go through a serial process of motion capture, logic computing, picture rendering, and screen display. The difficulty is compounded by the fact that VR cloud rendering and streaming also include network transmission, encoding, and decoding, all of which introduce further latency.

Figure 10. Serial processing: MTP latency prone to be higher than 20 ms

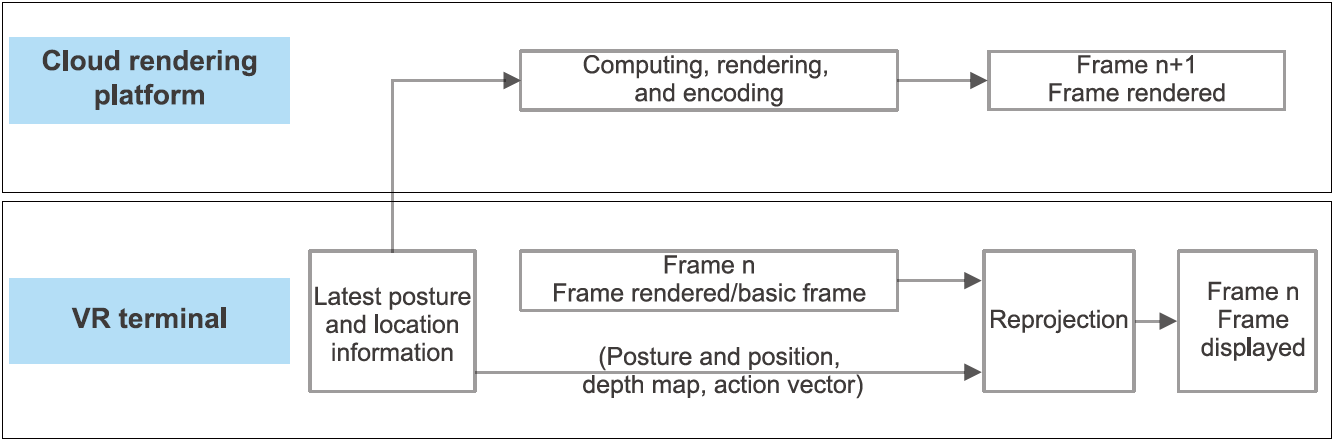

The fundamental idea of asynchronous terminal-cloud rendering is to run cloud rendering and streaming in parallel with terminal refreshing for display. That is, while the VR terminal uses the nth frame sent by the cloud rendering platform as the basic frame for projection, the cloud rendering platform is already processing the (n+1)th frame. In this case, the MTP latency depends on the terminal rather than on network transmission and cloud rendering, so the MTP latency can be kept below 20 ms.

Figure 11. Parallel processing: MTP latency determined by terminals

Asynchronous terminal-cloud rendering can relax the latency requirements on cloud rendering and network transmission. However, black edges, image quality deterioration, and other problems that hinder user experience will occur if the rendering and streaming latency is too high. According to the industry’s test results, this latency should be below 70 ms. Asynchronous rendering technology has already been applied by China Mobile in its Cloud VR commercial trial.
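
The difference between serial and asynchronous processing can be expressed as two latency sums. The sketch below is a simplified model under stated assumptions: the stage breakdown and every per-stage value are illustrative, not measurements, and only the 20 ms and 70 ms thresholds come from the text above.

```python
from dataclasses import dataclass

MTP_TARGET_MS = 20.0        # motion-to-photon target from the text
CLOUD_PATH_LIMIT_MS = 70.0  # rendering-and-streaming limit from the text


@dataclass
class StageLatencies:
    """Per-stage latencies in milliseconds (hypothetical breakdown)."""
    motion_capture: float
    uplink: float
    cloud_render_encode: float
    downlink: float
    decode: float
    reprojection_display: float


def serial_mtp_ms(s: StageLatencies) -> float:
    """Serial processing: every stage sits on the motion-to-photon path."""
    return (s.motion_capture + s.uplink + s.cloud_render_encode
            + s.downlink + s.decode + s.reprojection_display)


def async_mtp_ms(s: StageLatencies) -> float:
    """Asynchronous rendering: only terminal-side stages are on the MTP
    path, because the terminal re-projects the latest received frame."""
    return s.motion_capture + s.reprojection_display


def cloud_path_ms(s: StageLatencies) -> float:
    """Rendering-and-streaming latency that runs in parallel with display."""
    return s.uplink + s.cloud_render_encode + s.downlink + s.decode


# Assumed example values.
s = StageLatencies(motion_capture=2, uplink=5, cloud_render_encode=15,
                   downlink=5, decode=8, reprojection_display=10)
print(serial_mtp_ms(s), serial_mtp_ms(s) <= MTP_TARGET_MS)
print(async_mtp_ms(s), async_mtp_ms(s) <= MTP_TARGET_MS)
print(cloud_path_ms(s), cloud_path_ms(s) <= CLOUD_PATH_LIMIT_MS)
```

With these assumed values the serial path misses the 20 ms target while the asynchronous path meets it, provided the cloud path stays under the 70 ms limit.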

3.2.5.4. Low-Latency Codec Optimization

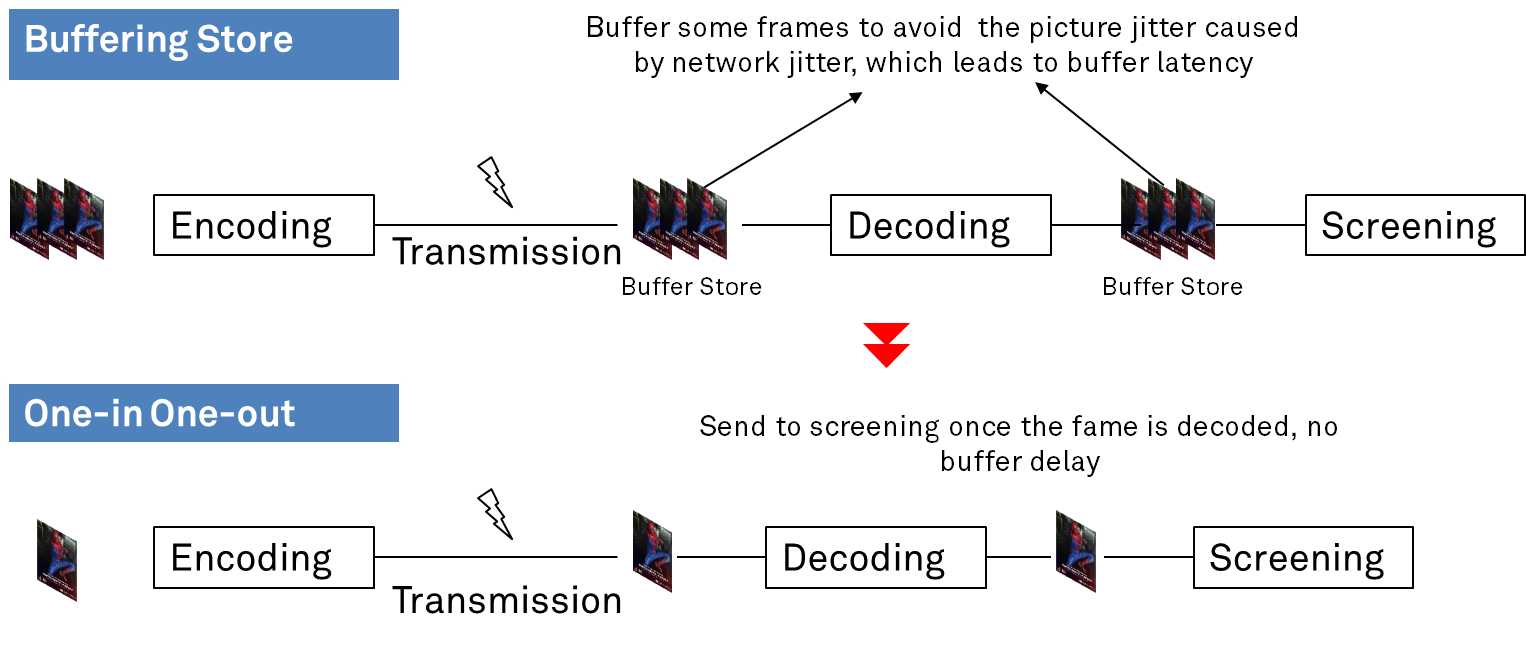

The long latency seen in the H.26x series of video codecs is mainly due to the following reasons.

- The encoding, transmission, and decoding are performed in a serial mode. In addition, only after the entire frame is decoded can it be delivered for terminal display.

- Jitter occurs during network transmission. In order to suppress screen flickering due to network jitter, a large buffer is used before decoding.

- The decoding time of different frames also jitters. Therefore, a certain amount of buffer is used after decoding to alleviate the screen flickering caused by the decoding time jitter. The latency as a result of network and decoding timing jitter tends to be longer than the decoding latency.

- The end-to-end system is also affected by software processing latency, such as the delay between the moment data is written and the moment it is output by the hardware/software, and the latency of audio-to-video synchronization.

To keep the end-to-end latency as low as possible, codecs can support slice-wise encoding and decoding, and slice-wise transmission. As a result, multiple operations can be carried out simultaneously. In addition, when the encoding structure is selected, it is advisable not to use multiple reference frames or to refer to backward frames. Frames should be displayed immediately after decoding.

Figure 12. Slice-wise encoding
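
The benefit of slice-wise processing can be estimated with a simple pipeline model. The following sketch assumes that a frame is split into equal slices, that the cost of each stage divides evenly across slices, and that there is no per-slice overhead or buffering; these are simplifying assumptions, and the function names and example timings are hypothetical.

```python
def frame_wise_latency_ms(encode_ms, transmit_ms, decode_ms):
    """Serial mode: each stage waits for the complete frame."""
    return encode_ms + transmit_ms + decode_ms


def slice_wise_latency_ms(encode_ms, transmit_ms, decode_ms, slices):
    """Pipelined mode with `slices` equal slices per frame.

    The first slice passes through all three stages; each remaining slice
    then completes one bottleneck-stage interval later.
    """
    per_slice = [encode_ms / slices, transmit_ms / slices, decode_ms / slices]
    return sum(per_slice) + (slices - 1) * max(per_slice)


# Assumed example: 8 ms encode, 4 ms transmit, 6 ms decode per frame.
print(frame_wise_latency_ms(8, 4, 6))
print(slice_wise_latency_ms(8, 4, 6, slices=4))
```

In this model the latency approaches the duration of the slowest stage as the number of slices grows, which is why overlapping encoding, transmission and decoding reduces the end-to-end delay.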


Network jitter should be minimized so that the coded slice data reaches the decoding chips at a stable interval. In that case, the data to be decoded does not need to pass through a buffer to absorb network jitter. The total decoding time at the decoding end should also be stable: in addition to ensuring that the slice transmission latency is stable, the tools deployed for controlling bit rate and encoding should be consistent. Otherwise, the decoding time of each slice will vary, and decoding buffers have to be used to smooth out the timing jitter in slice decoding.

Figure 13. One-in One-out

The improvement of traditional encoding technologies focuses on encoding and compression efficiency. With the advent of Cloud VR, new challenges of compression efficiency and latency are posed to the encoding technology. The low-latency codec optimization requires joint efforts of the industry. Leading vendors have begun to commercialize the One-in One-out technology. However, slice-wise encoding is still in an experimental phase.

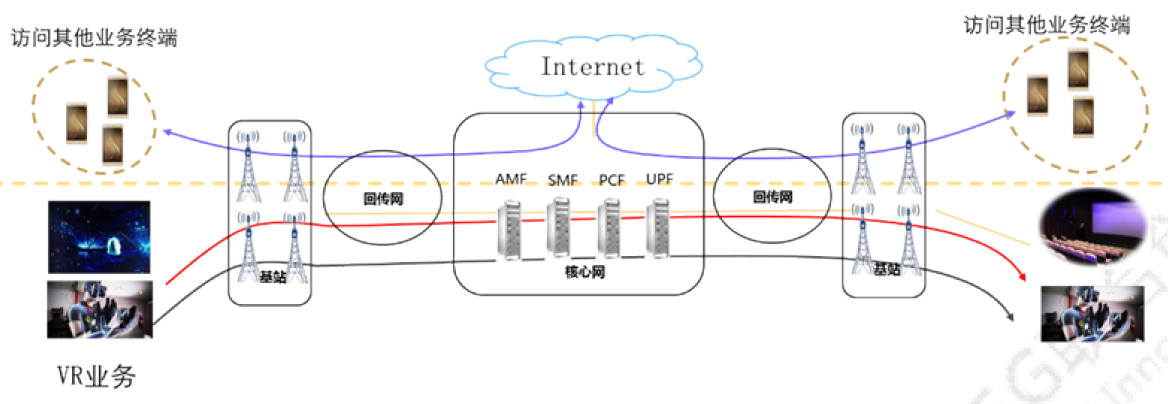

3.2.5.5. Service Assurance of Cloud VR Network Slicing

End-to-end Network Slicing can be used to support customized, environment- and scenario-specific end-to-end solutions. The technology allows independent resource allocation to terminals, radio access networks, transport networks, and core networks (CN) for different services. Dedicated network functions are also designed to provide isolated network services for the strong-interaction Cloud VR scenarios. In addition, the end-to-end network slicing management system also provides quality assurance services to monitor and automatically control the slice in real time. In this way, the performance indicators of Cloud VR services can be guaranteed.

With network slicing, common services and Cloud VR service streams run on different slice channels. When network congestion occurs, the bandwidth and latency conditions of the Cloud VR services remain intact, which reduces the negative impact on user experience.

- The 5G core network isolates the forwarding plane of the Cloud VR from that of other services based on the network functions virtualization (NFV) and Linux Container technologies. Quality of service (QoS) assurance can be realized for CN forwarding.

- The RAN implements slice-based resource management and scheduling to guarantee QoS for Cloud VR services on the RAN side.

- The transport network can implement QoS guarantee through a dedicated transmission. In the future, on-demand Cloud VR transmission slicing can be realised with the enhanced transmission technology.

Figure 14. Isolation and assurance of end-to-end slicing services

3.2.6. Devices

Cloud-based VR terminals will adopt the same technologies as conventional VR terminals in terms of display, interaction, and tracking. Meanwhile, they will become increasingly compact and lightweight, while the display technologies will evolve to provide more detailed images and wider viewing angles.

3.2.6.1. Natural Visual Experience Requirements

The human eye is capable of detecting approximately 60 pixels per degree. To deliver the most realistic experience, a 360° VR video therefore needs a horizontal resolution of 21,600 (360×60) pixels, and the full frame after longitude/latitude (equirectangular) mapping of the VR content measures 21,600×10,800 pixels. If the view angle of the terminal is 90°, the image resolution should be 5,400×5,400 pixels. The encoding and processing performance of VR terminals should therefore at least support a display resolution of 5,400×5,400.
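
The resolution figures above follow directly from the angular pixel density. As a minimal sketch (the function names are illustrative, and 60 pixels per degree is the approximate figure quoted in the text):

```python
PIXELS_PER_DEGREE = 60  # approximate acuity figure quoted in the text


def panorama_resolution(ppd=PIXELS_PER_DEGREE):
    """Full 360 x 180 degree longitude/latitude frame as (width, height)."""
    return 360 * ppd, 180 * ppd


def viewport_resolution(hfov_deg, vfov_deg, ppd=PIXELS_PER_DEGREE):
    """Pixels needed to cover a field of view at the given angular density."""
    return hfov_deg * ppd, vfov_deg * ppd


print(panorama_resolution())        # (21600, 10800)
print(viewport_resolution(90, 90))  # (5400, 5400)
```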

When the video frame rate is 90–120 fps, the human eye no longer perceives screen jitter. Therefore, the VR terminal should process at least 90 frames per second so that users do not experience dizziness or discomfort.

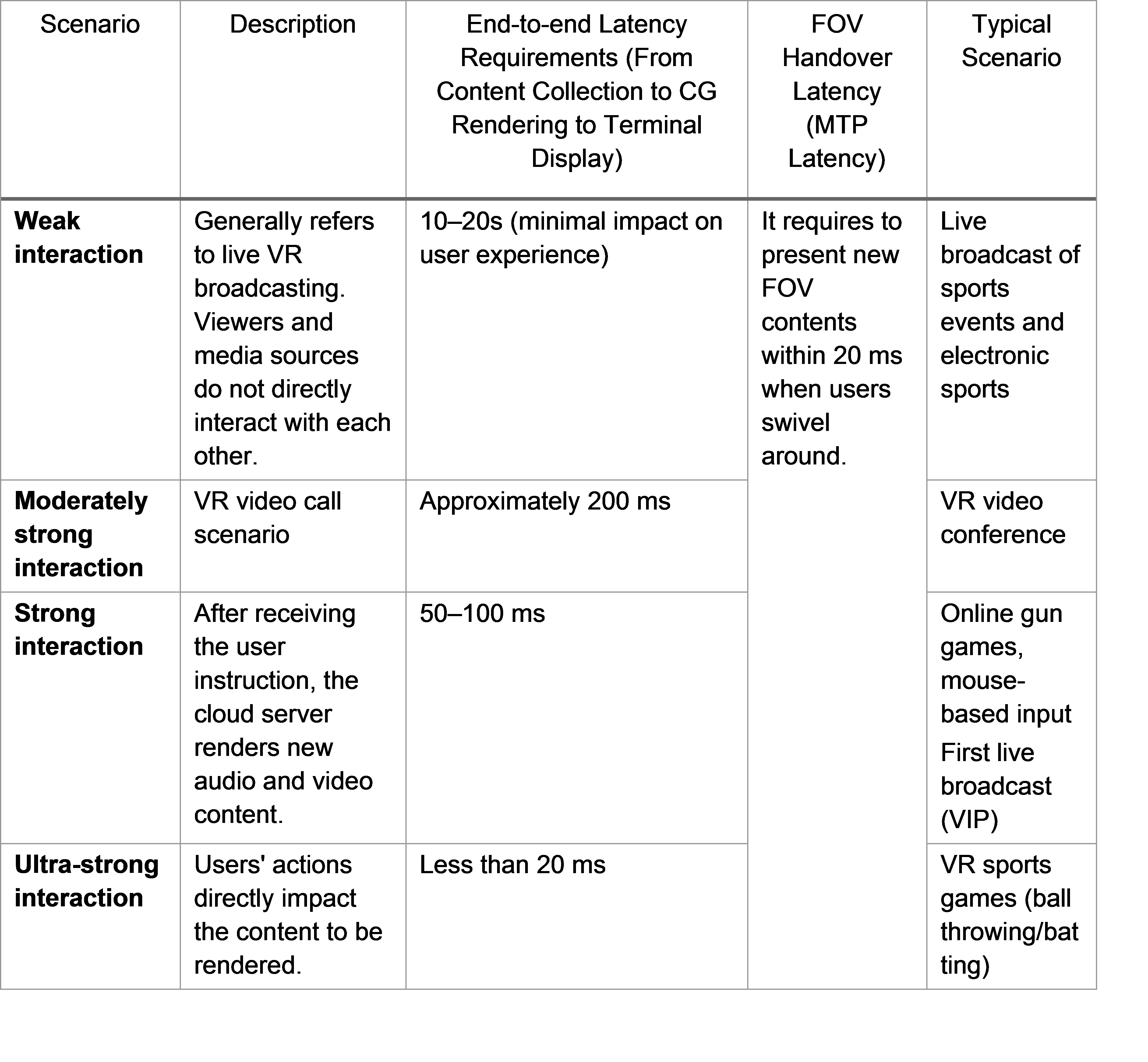

Table 4. Classification of VR interaction scenarios


The preceding table indicates that the latency during the process from the delivery of interaction messages to the display of visual results varies in different scenarios. Currently, the latency requirements in weak- and moderately strong-interaction scenarios have been met in actual applications. However, in strong- and ultra strong-interaction scenarios, the end-to-end latency of existing systems cannot satisfy user experience requirements.

3.2.6.2. Terminal Decoding Requirements

Unlike conventional VR services, Cloud VR gaming or rendering is mainly performed on the server side. This means that GPUs on the terminal side do not need to be very powerful, as they focus on overlaying decoded video content. To ensure display quality, the screen resolution of an entry-level VR terminal must be at least 2560×1440 pixels. The refresh rate of the terminal screen should be no less than 70 Hz, and the FOV needs to be at least 90°.
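
The entry-level thresholds above can be captured in a small validation helper. This is only an illustrative sketch: the `TerminalSpec` type, its field names and the example device values are hypothetical, while the thresholds are those stated in the paragraph above.

```python
from dataclasses import dataclass


@dataclass
class TerminalSpec:
    """Display characteristics of a VR terminal (hypothetical structure)."""
    width_px: int
    height_px: int
    refresh_hz: float
    fov_deg: float


def meets_entry_level(spec: TerminalSpec) -> bool:
    """Check the entry-level thresholds: at least 2560x1440 pixels,
    a refresh rate of at least 70 Hz and an FOV of at least 90 degrees."""
    return (spec.width_px >= 2560 and spec.height_px >= 1440
            and spec.refresh_hz >= 70 and spec.fov_deg >= 90)


print(meets_entry_level(TerminalSpec(2560, 1440, 72, 100)))  # True
print(meets_entry_level(TerminalSpec(2160, 1200, 90, 110)))  # False
```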

At the moment, the chips embedded in mobile terminals are capable of decoding 4K×2K@60P video (Qualcomm: Snapdragon 845[21]). A large number of standalone VR terminals are also equipped with such chips to support dual-way decoding. As for 8K×4K@30P video decoding, only NVIDIA graphics cards in PCs and a few other VR terminals currently support such a resolution. It is clear that current hardware decoding capability is not yet sufficient to provide a natural visual experience for users.

3.2.6.3. Locating and Tracking Requirements

Early VR is only capable of 3DoF tracking, that is, changes in the orientation of the user’s head are perceived by sensors. However, changes in head position, which are indispensable to VR gaming and other applications, cannot be obtained by 3DoF tracking.

Therefore, to simulate the user’s perception more accurately, 6DoF tracking is required. In addition, new VR interaction methods such as gesture tracking and electromyographic perception are likely to emerge in the future.
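
The difference between the two tracking modes can be illustrated with a simple data structure. The sketch below is an assumption-laden illustration rather than the representation used by any specific headset SDK: the class names, field names and units are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pose3DoF:
    """Head orientation only (rotation about three axes), in degrees."""
    yaw: float
    pitch: float
    roll: float


@dataclass
class Pose6DoF(Pose3DoF):
    """Orientation plus head position (translation on three axes), in metres."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


def orientation_changed(a: Pose3DoF, b: Pose3DoF) -> bool:
    """What a 3DoF tracker can observe: a change in orientation only."""
    return (a.yaw, a.pitch, a.roll) != (b.yaw, b.pitch, b.roll)


# The user leans 20 cm forward without rotating the head.
before = Pose6DoF(yaw=0.0, pitch=0.0, roll=0.0)
after = Pose6DoF(yaw=0.0, pitch=0.0, roll=0.0, z=-0.2)
print(orientation_changed(before, after))  # False: invisible to 3DoF tracking
print(before != after)                     # True: captured by 6DoF tracking
```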

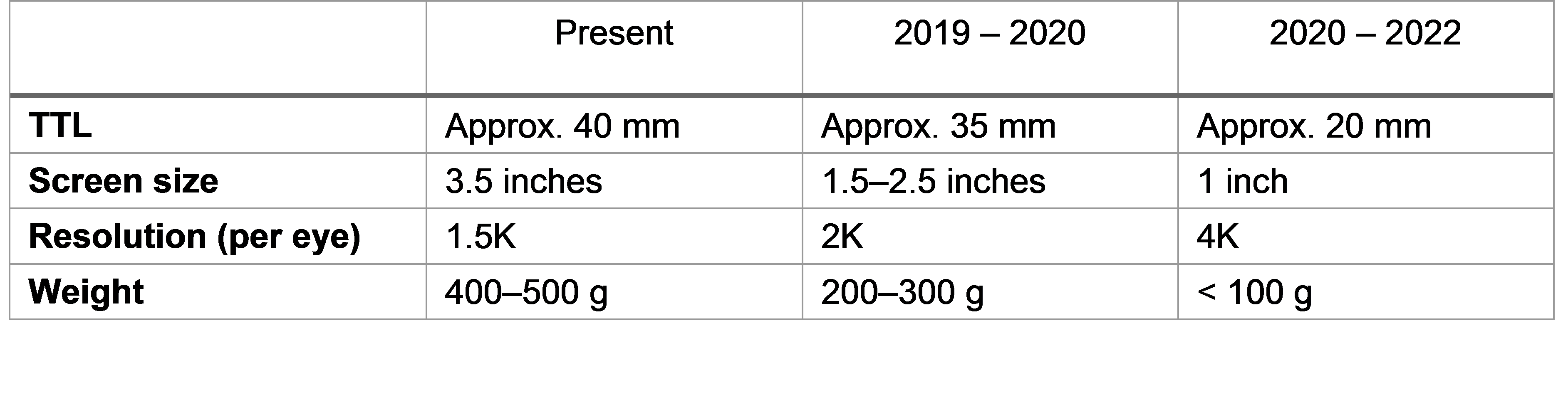

3.2.6.4. Terminal Physical Specifications

User comfort and experience are subject to the weight and size of the VR headset, while the headset design is affected by the display and optical imaging system. The current optical architecture adopts a traditional optical amplification mode; however, due to negative impacts (such as distortion and dispersion), the total track length (TTL) is relatively long. Therefore, the size and weight of the VR headset cannot be reduced simply by using a smaller screen or a greater level of optical magnification. The industry is also researching and developing new imaging systems, including areas such as folded optics, and further breakthroughs in the weight and size of VR terminals are expected. The following table lists some key performance indicators of future VR terminals.

Table 5. Prediction of future VR terminal parameters


3.2.6.5. Terminal Communication Requirements

The Cloud VR system is highly sensitive to transmission latency. It demands not only high throughput but also low end-to-end latency. 5G networks featuring high data rates and low latency are suitable for carrying Cloud VR services. A new trend in the VR industry is for terminals to integrate 5G modules and use 5G networks to transmit data.

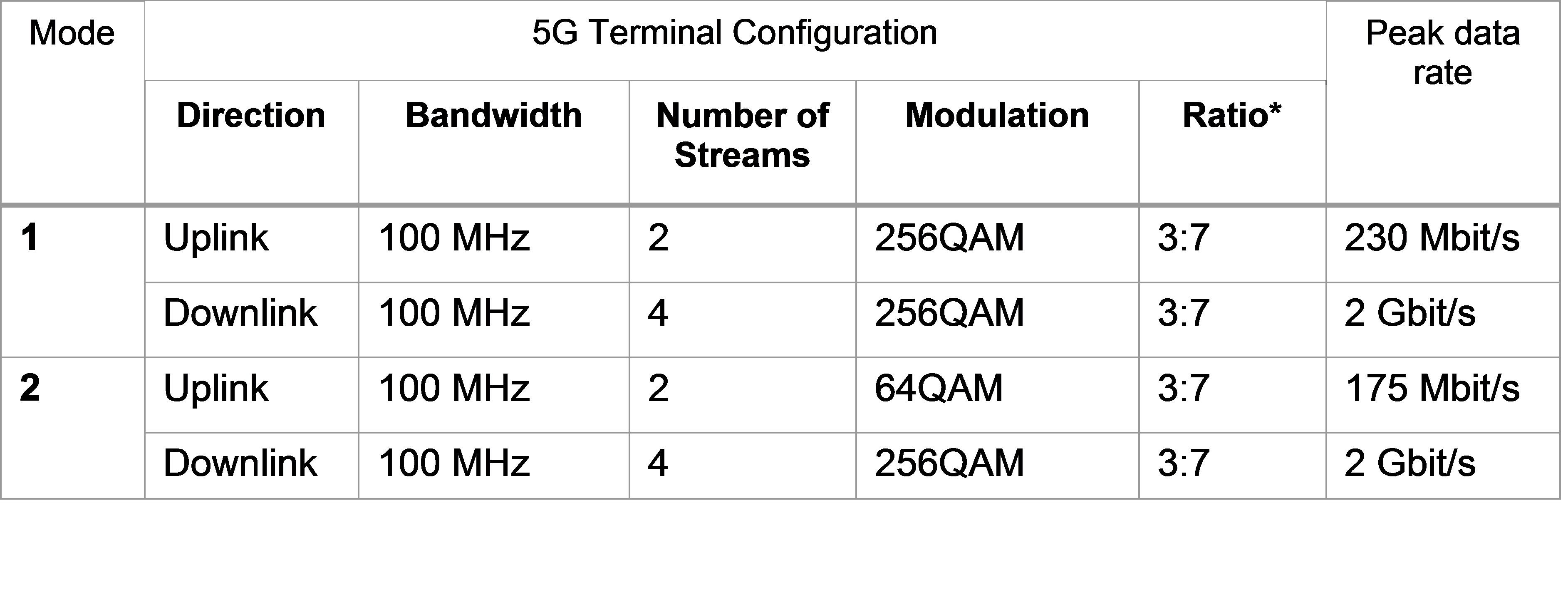

A number of aspects have to be considered for VR terminals. These include the peak data rate of the integrated 5G communications modules, 5G frequency bands, IP protocol stack, and the requirements posed by the integration of VR and 5G.

Table 6. Peak data rate of the 5G communications module


*Note: (Uplink/Downlink)

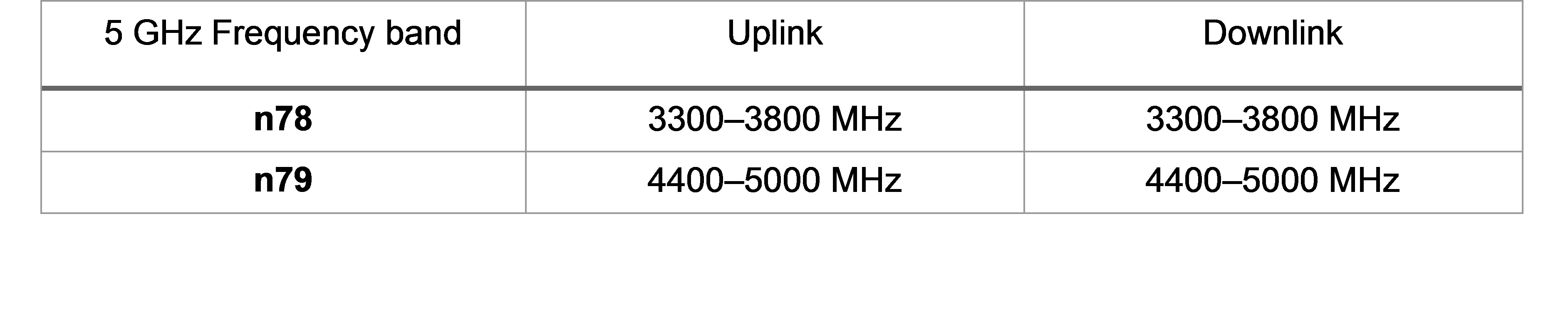

The 5G communications modules should at least support n78 and n79 frequency bands.

Table 7. Frequency requirements for the 5G module


VR terminals mainly incorporate 5G communications modules in one of two ways. One is to integrate 5G chip modules directly, which places high requirements on the VR terminal design. The other is to attach 5G communications modules via pluggable interfaces (mini PCIe or M.2) or via soldered assembly (LCC or LGA). VR terminals can use either approach according to specific product requirements.

4. Ecosystem: Enabling the Cloud AR/VR ecosystem from the operators’ point of view

4.1. Cloud AR/VR Value Chain and Ecosystem overview

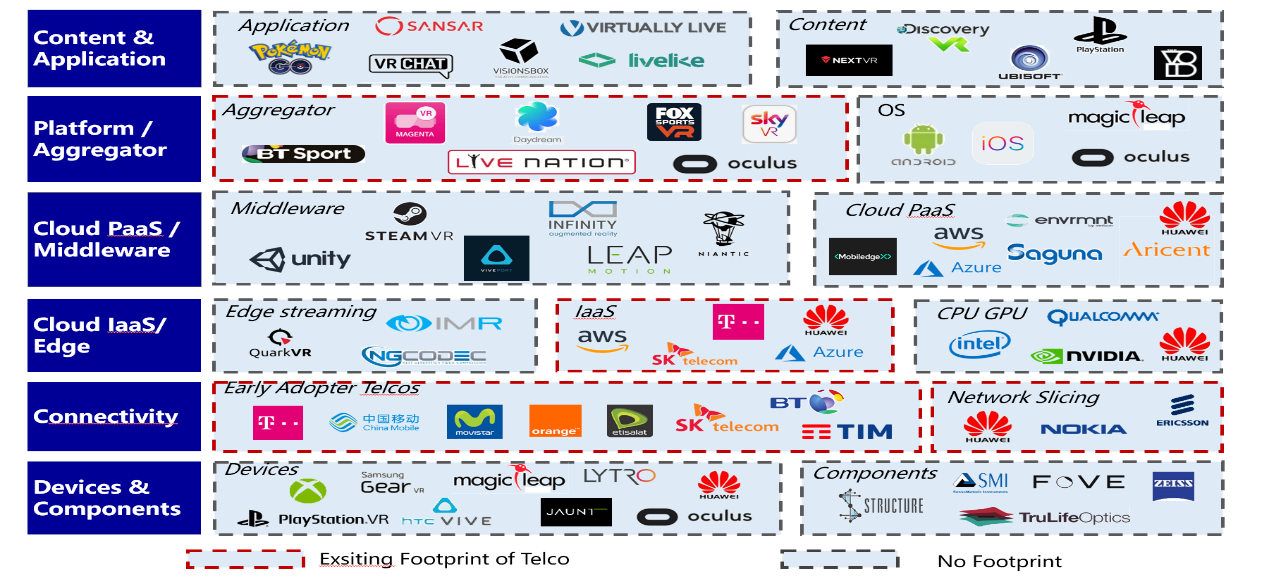

The AR/VR industry value chain has six distinctive segments.

Figure 15. Segments of the AR/VR industry

Components: Revenue from hardware will make up 50% of the overall AR/VR market. There are many components that make up the AR/VR devices, including CPUs, GPUs, eye-tracking, hand gesture tracking, optics, controllers and sensors. AR will require advanced machine vision capabilities, as well as processors optimized for other deep learning applications required by AR including prediction, voice recognition and face recognition.

Devices: VR head-mounted displays, AR glasses and 360° cameras, as well as AR/VR capabilities that are built into mobile phones. The speed of evolution of the market will be determined by how fast the industry can make the breakthroughs required in battery life, cooling, processor density and optics to deliver the form factors required, at mass-market price points.

Connectivity: Many of the best AR/VR services will be enriched by 5G networks. Streaming of 360° video will drive the need for bandwidth, and services in which video streams are augmented by mobile edge-hosted capabilities will require very low round-trip latencies. Precise location will be required for many services, including tourism, gaming and outdoor sports, while network slicing will provide the flexibility required to deliver the elastic capacity needs of augmented events.

Cloud Services IaaS: Cloud applications run on cloud infrastructure. Going forward the compute, storage and bandwidth requirements to process, store and render high-quality streams will increase demand for distributed telco cloud computing infrastructure that will be delivered by 5G. Telcos can play a role in this value chain by providing consistent, easy to consume and reliable distributed cloud infrastructure services.

Cloud Services PaaS and Middleware: Cloud-based PaaS and Middleware enable development organisations to focus more on user needs and business logic and less on the overhead of managing infrastructure and systems. Developers need seamless, consistent processes and interfaces to access telco cloud platform services anywhere in the world. These services could include last-mile CDN, rendering, encoding, and time-critical AI services. Developers will most likely settle on a handful of PaaS and Middleware platforms. Operators with their own platform services will have a strong competitive advantage in the AR/VR space if they can find mechanisms to make the consumption of those services seamless, consistent and scalable.

Platform/Aggregator: The VR gaming market has traditionally been dominated by HTC/Valve, Oculus from Facebook and Sony PlayStation. In mobile AR the major operating environment battle is between ARKit[22] from Apple and ARCore[23] from Google, with the expectation being that this battle will continue with the introduction of augmented reality and mixed reality (MR) glasses, with the possible addition of Microsoft[24], Meta[25] and Magic Leap[26]. 3D engines are a key application enabler technology, where platforms from Unity[27], Epic Games Unr