Submer: Immersion Cooling for Research and Colocation

Submer presents the SmartPodX, the first commercially available OCP-compatible Immersion Cooling system. It conforms to both standard server formats and Open Compute Project (OCP) specifications for high-performance computing, supercomputing, hyperscale infrastructure, research and colocation:

- Up to 100 kW of heat dissipation

- 45U or 22U, 19” or 21” Open Compute (OCP)

- Local and remote management interfaces

- Simplified maintenance

- Submer Cloud (remote monitoring & management)

- DCIM API – integrate with monitoring tools

- Compact form factor

- Tier III and Tier IV compatible

- Vertically Stackable configurations available

- Optional IP65 rating (dust-tight and protected against water jets)

Submer Technologies is partnering with 2CRSi to deliver High-Performance Computing (HPC) environments to the EU Joint Research Centre in Ispra, Italy.

The Joint Research Centre (JRC) is the largest European Commission facility outside Brussels and Luxembourg. It is one of Europe’s most important research campuses, spanning many scientific disciplines, with laboratories serving governmental, scientific and private projects.

In a collaborative effort with Airbus, Submer Technologies and 2CRSi (a leading international supplier of HPC servers) are providing supercomputer-speed processing in high-density clusters to support real-time workloads for advanced Artificial Intelligence (AI) cybersecurity research applications at the JRC campus.

Submer’s advanced immersion cooling systems use an environmentally safe dielectric cooling fluid that surrounds the computing hardware and cools it one thousand times more efficiently than air. This means that 2CRSi can deploy more CPU and GPU clusters closer together in high-density configurations while protecting vital components from thermal and environmental risks and cutting total electricity consumption by up to 50%.

Importantly, the entire installation is built using component standards set by the Open Compute Project (OCP) for hyperscale infrastructures. The 2CRSi servers will contain the latest high-density configurations of Intel and NVIDIA processors, powered by Lite-On power-shelf rectifiers. The Submer SmartPodX was explicitly designed to support and perform under OCP specifications.

The collaboration between Submer and the other OCP members represents a considerable step forward in data centre design. The SmartPodX has been designed to address physical space and energy requirements while delivering a high-density compute environment that could not be achieved with the same efficiency using air cooling.

Among the goals of the project, the installation of the SmartPodX at the Joint Research Centre (JRC) will deliver:

- Immersion Cooled GPU accelerated AI HPC

- Limited OPEX/CAPEX budget

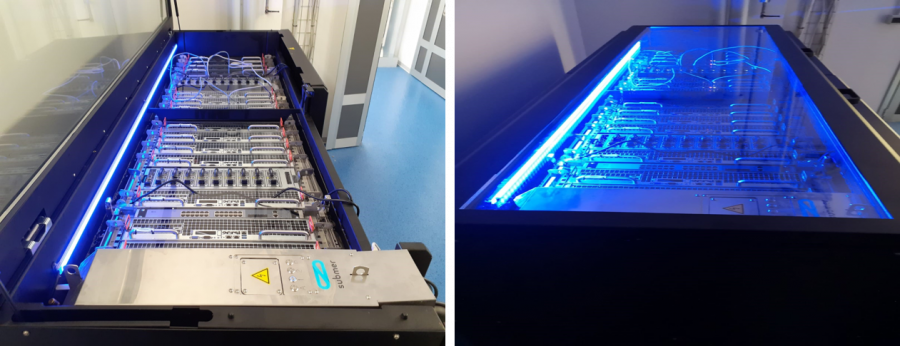

Figure 1. SmartPodXL installed at JRC

Introduction

In 2015, Daniel Pope and Pol Valls founded Submer Technologies. The company, with its offices, R&D lab and manufacturing plant in Barcelona, was created in response to a precise need and intuition: to tackle the data centre business from a new angle with a highly efficient solution that would let data centres drastically reduce cooling costs while respecting the environment. That solution was Immersion Cooling, designed not just for now but for the future, and it is called SmartPod.

Pol and Daniel dreamed of making the construction and operation of data centres more sustainable, so they began assembling a multidisciplinary, world-class team with skills and experience in data centre operations, thermal engineering, hardware and software development, service delivery and innovation management.

Submer is changing how datacentres are being built from the ground up, to be as efficient as possible and to have little or positive impact on the environment around them (reducing their footprint and their consumption of precious resources such as water).

Business Imperative

The new digital trends (Smart Cities, 5G, IoT, Machine Learning, Deep Learning, AI, Edge, etc.) that are transforming society and will shape the social, urban and economic landscape all have something in common: they require processing an ever-growing amount of data. In this scenario, it is impossible to imagine a future without data centres. The data centre industry now faces a seemingly unsolvable problem: how can it keep growing and scale in an economically sound way? And how can it achieve that goal sustainably, so that economic growth does not come at the expense of the environment?

Submer’s Liquid Immersion Cooling (LIC) technology was conceived with these questions in mind: to help our customers scale their business while giving something back to the community and to the world where we live and work.

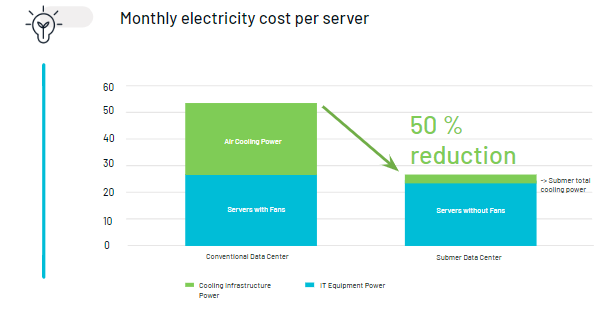

In a conventional data centre, approximately 40% of the electricity is used for cooling. The SmartPodX can save up to 95% of cooling costs (which, together with the savings from removing server fans, corresponds to about 50% of total electricity consumption) and up to 85% of physical space. As a result, a data centre (or HPC facility) adopting our technology has a considerably smaller environmental impact, or even a positive one, than one using traditional air cooling. Submer’s LIC technology also relies on a proprietary coolant (SmartCoolant) that is biodegradable and 100% non-hazardous to people and the environment.

Submer Immersion Cooling and the SmartPodX

In 2018, Submer came out of R&D and brought the SmartPod Immersion Cooling system to market, delivering unprecedented levels of computing efficiency to the data centre:

- Power Usage Effectiveness (PUE) ratios of 1.03 or better – 25x to 30x more efficient

- Compute densities exceeding 100 kW – 10x to 20x higher per rack footprint

- IT Hardware lifespan increase of 20% to 50%
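PUE is total facility power divided by IT power, so PUE − 1 is the non-IT overhead (mostly cooling) per unit of IT load. One way to read the "25x to 30x more efficient" figure is as a ratio of these overheads. The minimal sketch below assumes that interpretation; the air-cooled baselines (1.67, the Uptime Institute average quoted in Economic Benefits, and 1.75 to 1.89) are illustrative inputs, not a method published by Submer.

```python
def overhead_w_per_kw(pue: float) -> float:
    """Non-IT (mostly cooling) power drawn per kW of IT load, in watts."""
    return (pue - 1.0) * 1000.0


def overhead_ratio(pue_baseline: float, pue_immersion: float) -> float:
    """How many times larger the baseline overhead is than the immersion overhead."""
    return (pue_baseline - 1.0) / (pue_immersion - 1.0)


IMMERSION_PUE = 1.03  # SmartPodX figure quoted in the text

for baseline in (1.67, 1.75, 1.89):  # illustrative air-cooled PUE values (assumption)
    print(f"air PUE {baseline:.2f}: "
          f"{overhead_w_per_kw(baseline):.0f} W/kW vs "
          f"{overhead_w_per_kw(IMMERSION_PUE):.0f} W/kW, "
          f"ratio {overhead_ratio(baseline, IMMERSION_PUE):.1f}x")
```

Under this reading, the Uptime Institute average of 1.67 gives a ratio of about 22x, while the 25x to 30x range corresponds to air-cooled PUEs of roughly 1.75 to 1.9. The 670 W and 30 W per kW figures quoted in Economic Benefits are the same overhead expressed per kW of IT load.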

In 2018, at Mobile World Congress (MWC) in Shanghai, Submer won the 4YFN Best IoT Startup of the Year award. The award was the latest in a series of significant achievements for Submer, following two other landmark successes: the partnership with ETP4HPC and the strategic collaboration with CERN, the European Organization for Nuclear Research, where the SmartPod was installed and tested at the LHCb (Large Hadron Collider beauty) experiment, one of the seven particle physics detector experiments collecting data at CERN’s LHC accelerator.

In 2019, at the Open Compute Global Summit in San José, California, Submer released the SmartPodX, the first commercially available OCP-compatible Immersion Cooling system.

How does it work?

Every electrically powered component generates heat. When a processor is not adequately cooled, it becomes too hot, which degrades performance and, in some cases, causes irreparable damage. The same applies to all IT hardware in a data centre, which is why cooling is fundamental to operating a data centre efficiently and productively.

The SmartPodX is a “data centre in a box”. In traditional data centres, racks stand vertically; in the SmartPodX, the rack is horizontal, so operators can submerge the servers in the tank, where the IT hardware is cooled by SmartCoolant (a proprietary, biodegradable fluid that is 100% non-hazardous to people and the environment). The SmartCoolant is in turn cooled by a secondary water loop: heat passes from the coolant to the water in a heat exchanger inside the Coolant Distribution Unit (CDU), the “brain” of the SmartPodX, and the warm water is carried to an external dry cooler or adiabatic cooling tower. The cooled water is then pumped back and the cycle repeats. This cycle is one part of the overall process that allows a 45U SmartPodXL configuration to dissipate more than 100 kW.
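The water flow the secondary loop needs follows the standard sensible-heat balance Q = ṁ · c_p · ΔT. The sketch below sizes that flow for the 100 kW figure above, assuming plain water (c_p ≈ 4.186 kJ/kg·K, density ≈ 1 kg/L) and a hypothetical 10 K rise between supply and return; Submer’s actual loop temperatures and flow rates are not given in this document.

```python
WATER_CP_KJ_PER_KG_K = 4.186  # specific heat of water (approximate)
WATER_DENSITY_KG_PER_L = 1.0  # approximate, near room temperature


def water_flow_for_load(heat_kw: float, delta_t_k: float) -> tuple[float, float]:
    """Return (mass flow in kg/s, volume flow in L/min) needed to carry heat_kw
    away with a water temperature rise of delta_t_k, from Q = m_dot * cp * dT."""
    mass_flow_kg_s = heat_kw / (WATER_CP_KJ_PER_KG_K * delta_t_k)
    volume_flow_l_min = mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0
    return mass_flow_kg_s, volume_flow_l_min


# 100 kW is the dissipation quoted for a 45U SmartPodXL; the 10 K rise is hypothetical.
m_dot, l_per_min = water_flow_for_load(heat_kw=100.0, delta_t_k=10.0)
print(f"{m_dot:.2f} kg/s, about {l_per_min:.0f} L/min")
```

Because the required flow is inversely proportional to ΔT, halving the temperature rise doubles the flow the loop must move.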

The SmartPodX brings two additional innovations to market at the same time.

Cool-Swap: When an engineer replaces drives, or even entire servers, without interrupting operations, it’s called a “hot swap.” Cool-Swap applies the same idea to the cooling system: the SmartPodX is the first Immersion Cooling system to place the Coolant Distribution Unit (CDU) inside the fluid as a modular component. This matters because the CDU can be replaced quickly, if needed, without interrupting the servers at all.

The SmartLift: The SmartPodX is built to integrate seamlessly with our SmartLift server lift, which is set to change the Immersion Cooling landscape. The SmartLift runs on a dedicated rail system along the front of the entire SmartPod installation, allowing engineers and technicians to access servers easily for maintenance. It also works with the modular CDU.

Among other things, these innovations will bring immediate benefits today and set the stage for automated systems, self-healing data centres, and the new age of Edge and remote computing environments to come.

Apart from being a highly efficient solution for data centres and HPC, the SmartPodX represents a clear example of how it is possible to scale the digital business in complete harmony with the environment (the coolant we use is 100% biodegradable, and our system operates with a closed water loop, so there is no waste of water).

With the SmartPodX, Submer will take compute densities and extreme power efficiencies to a broader market and allow for the creation of the world’s first green supercomputers.

Thanks to its modular, compact design and a sealed lid that isolates the tank from the atmosphere when closed, the SmartPodX can be placed anywhere, indoors or outdoors. This is why, especially in Edge or smart city deployments, Submer’s cleantech can reach unrivalled IT hardware densities in a limited space and very close to the customer, with clear benefits in terms of latency. Implementing one of Submer’s solutions (either the SmartPodX or one of our next-generation containerised data centres) means caring not only about your own business but also about the environmental and urban context in which our LIC solutions are deployed, given how closely they integrate with pre-existing infrastructure.

Submer delivers complete, autonomous Immersion Cooling solutions in containerised form (Next-Generation Datacenter), as well as LIC solutions that can be deployed in existing facilities with minimal retrofitting. The prerequisites for the SmartPodX to work properly are simple: water, electricity and an Internet connection. The solution also requires a secondary loop, a water system that carries water into the heat exchanger, where the heat is removed. The SmartPodX can easily integrate with any dry cooler or adiabatic cooling system already on site or purchased alongside the Immersion Cooling solution.

Economic Benefits

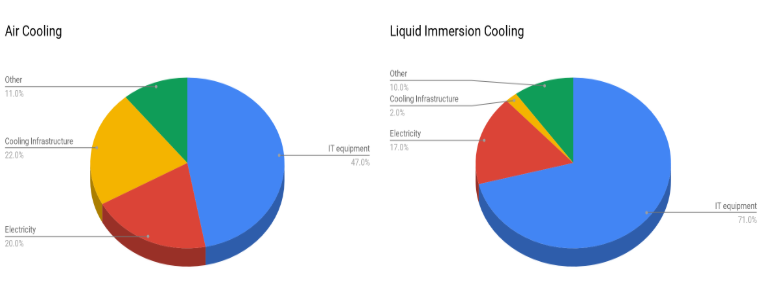

The following table explains the economic benefits of Submer’s Immersion Cooling solution compared to air cooling:

Two data centres are compared, one air-cooled and one immersion-cooled by Submer, under the following assumptions:

- PUE Submer: 1.03

- Power consumption per server: 1 kW

- Number of servers: 200

- Total energy consumed by the servers operating 24/7: 144,000 kWh per month

- kWh price: 0.13 € (it can vary by country)

- Server fan removal savings: 15% (actual values up to 25%)

Given these conditions, for a 300 kW power consumption, the monthly energy consumption is as follows:

| Monthly consumption (kWh) | Air-based, 300 kW power consumption | Submer, 300 kW power consumption |
|---|---|---|
| Servers | 144,000 | 122,400 |
| Cooling | 128,160 | 3,672 |
| TOTAL | 272,160 kWh per month | 126,072 kWh per month |

Monthly electricity cost of both solutions (at a price of about 0.13 € per kWh):

| Monthly cost | Air-cooled | Submer |
|---|---|---|
| Servers | 19,153 € | 16,279 € |
| Cooling | 17,045 € | 488 € |
| TOTAL | 36,197 € per month | 16,768 € per month |

The difference between air cooling and Submer LIC: 19,430 € per month
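The worked example can be reproduced with a simple model: monthly server energy is the IT load times the hours in the month, reduced by the fan-removal saving for immersion, and cooling energy is server energy times (PUE − 1). This is a minimal sketch, assuming a 30-day month (which gives the 144,000 kWh figure), an air-cooled PUE of 1.89 (implied by 128,160 / 144,000), a 15% fan-removal saving (implied by 122,400 / 144,000) and a price of 0.133 € per kWh, which is the rate the euro figures in the tables work out to. The function and constant names are illustrative.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: a 30-day month, which reproduces 144,000 kWh

SERVERS = 200
KW_PER_SERVER = 1.0
PRICE_EUR_PER_KWH = 0.133  # assumption: the rate implied by the euro figures in the tables


def monthly_energy(it_load_kw: float, pue: float, fan_savings: float = 0.0,
                   hours: float = HOURS_PER_MONTH) -> tuple[float, float, float]:
    """Return (server_kwh, cooling_kwh, total_kwh) for one month.

    Fan removal lowers the server draw; all non-IT overhead (PUE - 1) is
    treated as cooling energy, as in the worked example.
    """
    server_kwh = it_load_kw * (1.0 - fan_savings) * hours
    cooling_kwh = server_kwh * (pue - 1.0)
    return server_kwh, cooling_kwh, server_kwh + cooling_kwh


it_load_kw = SERVERS * KW_PER_SERVER
air = monthly_energy(it_load_kw, pue=1.89)                       # 1.89 implied by 128,160 / 144,000
submer = monthly_energy(it_load_kw, pue=1.03, fan_savings=0.15)  # 15% implied by 122,400 / 144,000

for label, (servers, cooling, total) in (("Air-cooled", air), ("Submer", submer)):
    print(f"{label:<10} servers {servers:>9,.0f} kWh | cooling {cooling:>9,.0f} kWh | "
          f"total {total:>9,.0f} kWh | {total * PRICE_EUR_PER_KWH:>8,.0f} EUR")

monthly_saving_eur = (air[2] - submer[2]) * PRICE_EUR_PER_KWH
print(f"Monthly difference: {monthly_saving_eur:,.0f} EUR")
```

With these inputs the sketch gives 36,197 € versus 16,768 € per month and a difference of about 19,430 €, matching the tables. At exactly 0.13 € per kWh, the same 146,088 kWh difference would be worth about 18,990 €.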

Submer guarantees a 50% reduction in CAPEX and a 45% reduction in OPEX, based on a cooling OPEX of 30 W per kW of IT load with immersion cooling versus 670 W per kW with air cooling (the latter derived from the latest average PUE of 1.67 reported by the Uptime Institute).

JRC decided to adopt Submer’s solution, knowing that it can guarantee:

LIC & OCP: >50% CAPEX savings vs traditional

- LIC: economical build, more density and future proof investment

- OCP: economical HW (further 8% hardware savings)

LIC & OCP: >45% OPEX savings

- OCP simplifying overall operations and maintenance

- European Commission is embracing “Open Standards”

Submer has designed Immersion Cooling solutions to meet the needs of any customer operating in any field where high computational densities are needed: from research to oil and gas, telco to supercomputing, mobile to even crypto mining, and medical applications to Edge solutions.

Behind the need to switch cooling from air to liquid lies a situation that can no longer be ignored or underestimated: it is getting hotter, and harder, for data centres and supercomputers to stay cool. Advances in high-performance computing continue to drive temperatures up. After 50+ years of incremental improvements in mechanical ventilation in the data centre space, the demands of modern infrastructure have begun to surpass the capabilities of the traditional air-cooled rack. This critical crossroads presents both fundamental challenges and opportunities when exploring the question: what does a next-generation integrated modular infrastructure look like without the restraints of air cooling?

Submer offers solutions that are adaptable to any geographical conditions and that guarantee the following benefits:

Figure 3. Electricity cost reductions

In terms of electricity consumption, any operator can see immediate benefits starting from the first month of implementation of Submer’s solutions:

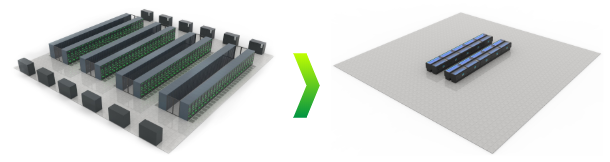

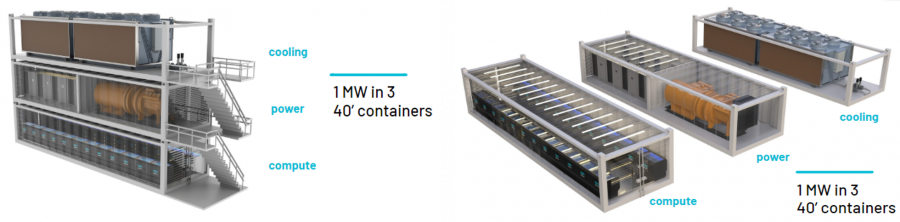

Submer not only guarantees savings on energy consumption but also on the physical space a data centre occupies. The following example shows a visual rendering of a 2 MW data centre, air-cooled (on the left) and immersion-cooled with Submer’s SmartPodX (on the right):

Figure 4. Space reduction with SmartPodX

This translates into 85% less space needed.

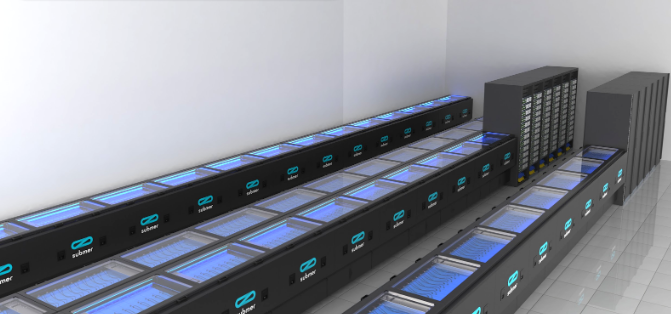

Submer solutions are designed to be configured in an entirely new facility, in a pre-existing facility, or alongside air-cooled racks.

The SmartPodX is an autonomous, all-in-one solution that can be deployed easily in a containerised datacenter/Edge scenario.

To sum up, the benefits of Submer’s Immersion Cooling solutions are:

- 95% reduction in cooling OPEX.

- ROI <1 year.

- 85% increase in computing density (Dissipation capacity of over 100 kW in the space of two standard racks).

- 50% reduction in CAPEX build costs (Rapidly deployable in raw space without the need for raised floors. Minimum retrofitting required for existing DCs).

- 30% increase in hardware lifespan and 60% reduction in hardware failure (No moving parts, no dust particles, no vibrations, less thermal and mechanical stress due to the uniformity provided by the liquid and its viscosity).

- 99% of heat captured in the form of warm water (allows unprecedented Energy Reuse Effectiveness if data centres are built close to communities or industry, opening new revenue streams).

- >60% reduction in management and operational procedures (at Submer, we believe that in the not-so-distant future, robotics will combine with Immersion Cooling and modular designs to deliver solutions deployable anywhere from the Edge to hyperscale. We will then be able to offer our customers an even better-structured, more integrated offering that combines software resiliency with physical-layer resiliency, guaranteeing unprecedented IT hardware density and efficiency and facilitating energy reuse programs.)

- Zero waste of water.

- 25%-40% saving on TCO.
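Energy Reuse Effectiveness (ERE), a metric defined by The Green Grid, credits heat that is exported and reused: ERE = (total facility energy − reused energy) / IT energy, so unlike PUE it can fall below 1. The minimal sketch below uses the Submer monthly figures from the worked example in Economic Benefits; the reused-energy values are purely hypothetical.

```python
def ere(total_facility_kwh: float, it_kwh: float, reused_kwh: float) -> float:
    """Energy Reuse Effectiveness: (total - reused) / IT energy."""
    if not 0 <= reused_kwh <= total_facility_kwh:
        raise ValueError("reused energy must be between 0 and total facility energy")
    return (total_facility_kwh - reused_kwh) / it_kwh


TOTAL_KWH = 126_072  # Submer monthly total from the economic example
IT_KWH = 122_400     # Submer monthly server consumption from the same example

pue = TOTAL_KWH / IT_KWH
print(f"PUE = {pue:.2f}")
for reused in (0, 30_000, 60_000):  # hypothetical heat delivered to a heating network
    print(f"reused {reused:>6,} kWh -> ERE = {ere(TOTAL_KWH, IT_KWH, reused):.2f}")
```

With no reuse, ERE equals PUE; every kWh of captured heat that is actually delivered to a consumer lowers it further.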

Implementing the Solution

The modular and compact design of the SmartPodX ensures fast deployment with Edge-ready solutions, bringing compute where it is needed. Submer’s technology makes it possible to reach unprecedented IT density and a PUE below 1.03 anywhere in the world, including regions with extreme climates that would normally reduce the efficiency of a data centre.

Thanks to its highly adaptable nature, the SmartPodX can be deployed in many contexts where high computational capacity is needed (with the benefits of saving up to 50% on electricity consumption and wasting no water): from research laboratories to the oil and gas industry, colocation centres to high-performance computing, the sport and entertainment industry (F1 races) to international space programs, and government agencies to more traditional data centres. In an urban context, for example, Submer’s LIC technology can help improve the energy efficiency of cities by reusing the heat produced by the IT hardware cooled in the SmartPodX to heat surrounding urban or industrial areas.

The SmartPodX is the definitive cleantech designed to change data centre strategy, helping the industry shift towards a safer, more sustainable and more efficient future.

Finally, the SmartPodX has been designed to be installed in pre-existing facilities, with minimal retrofitting, and to operate with zero water waste, since it uses a closed water loop.

Since the launch of the SmartPodX, Submer has been actively partnering with other OCP members such as 2CRSi, Lite-On, Gigabyte and others to present and deploy full OCP solutions (cooling system + cabling + switches + IT hardware) that deliver unprecedented IT hardware densities in order to achieve:

- Unrivalled computational capacities

- Enormous benefits for the economy of our clients

- A minimum or positive impact on the environment

- High energy efficiency

Figure 5. Timeline of the project

The customer adopted an Immersion Cooling solution because of the complexity of air-cooling large numbers of 8-GPU nodes. Hence the decision to use a cooling solution about 1,000 times more efficient than air in terms of heat dissipation.

The project had the following goals:

- Immersion Cooled GPUs for accelerated AI HPC

- Limited OPEX/CAPEX budget

- Greenfield facility imposing space restrictions

- Highly secure environment

- Difficulty cooling efficiently with air (>2 kW per node)

- Not considering OCP

- Energy reuse
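The "1,000 times" comparison is commonly explained by volumetric heat capacity: a litre of liquid absorbs far more heat per degree than a litre of air. The rough sketch below applies Q = ρ · c_p · V̇ · ΔT to one node, assuming textbook air properties and generic dielectric-oil properties (density about 850 kg/m³, c_p about 2.0 kJ/kg·K). These fluid values are assumptions, not published SmartCoolant data; the 2 kW node load comes from the project goals, while the 15 K temperature rise is hypothetical.

```python
AIR = {"density": 1.2, "cp": 1.005}  # kg/m^3, kJ/(kg*K), near room temperature
OIL = {"density": 850.0, "cp": 2.0}  # assumed generic dielectric fluid, not SmartCoolant data


def volumetric_heat_capacity(fluid: dict) -> float:
    """kJ absorbed per cubic metre of fluid per kelvin of temperature rise."""
    return fluid["density"] * fluid["cp"]


def volume_flow_m3_s(heat_kw: float, fluid: dict, delta_t_k: float) -> float:
    """Volume flow needed to carry heat_kw away with a temperature rise of delta_t_k."""
    return heat_kw / (volumetric_heat_capacity(fluid) * delta_t_k)


NODE_KW, DELTA_T = 2.0, 15.0  # >2 kW per node (project goals); 15 K rise is hypothetical
ratio = volumetric_heat_capacity(OIL) / volumetric_heat_capacity(AIR)
air_flow = volume_flow_m3_s(NODE_KW, AIR, DELTA_T)
oil_flow = volume_flow_m3_s(NODE_KW, OIL, DELTA_T)
print(f"volumetric heat capacity ratio: {ratio:.0f}x")
print(f"air: {air_flow * 1000:.0f} L/s, oil: {oil_flow * 1000:.2f} L/s per node")
```

Under these assumptions the liquid carries roughly 1,400 times more heat per unit volume, the same order of magnitude as the figure quoted in the text; real-world performance also depends on flow rates, convection and the specific fluid.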

Figure 6. Typical budget allocation ratios

Adopting Submer’s Immersion Cooling solution allows you to save on your budget and invest the money saved in more IT hardware, which translates into higher IT hardware density and, consequently, more computational capacity.

The solution proposed to JRC had the following configuration:

- SmartPodXL 42OU

- 2CRSi Octopus 1.8b 2OU

- Lite-On OCP power shelf

- FS Fanless switches

Challenges and Lessons Learned

Our customer had a specific need, widespread in the HPC industry: to increase compute density in a limited space and improve performance without the restraints of air cooling. That was both the main goal and the main challenge of this project.

With its SmartPodXL technology, Submer helped JRC achieve its goal, delivering:

- 100 kW+ per rack; stackable to 25 kW+ per square foot

- Smaller server hardware for greatly increased quantity per rack

- PUE <1.03

- Thermal battery of one hour or more

Conclusion

As a result of this project, Submer Technologies and 2CRSi signed a contract with Airbus to supply very high-performance servers for a European anti-crime agency. The deal was announced at GITEX 2019 in Dubai.

The news was covered by the international press:

- http://saudigazette.com.sa/article/579266/BUSINESS/Airbus-taps-2CRSi-for-calculation-servers

- https://www.tahawultech.com/video/2crsi-submer-and-airbus-partner-to-accelerate-innovation/

- https://insidehpc.com/2019/09/immersive-cooling-from-submer-technologies-coming-to-eu-joint-research-center-in-italy/

The GSM Association (“Association”) makes no representation, warranty or undertaking (express or implied) with respect to and does not accept any responsibility for, and hereby disclaims liability for the accuracy or completeness or timeliness of the information contained in this document.